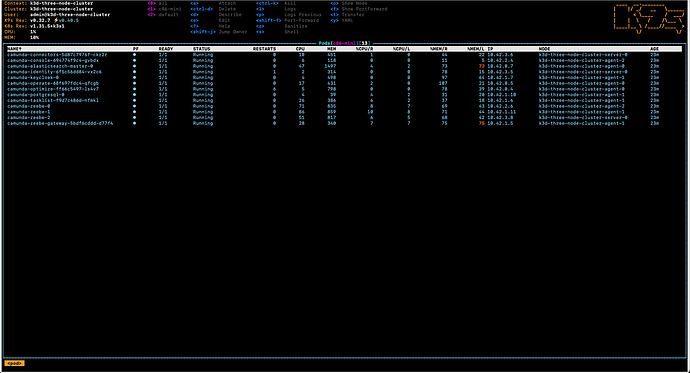

I have deployed a self-managed Camunda instance using Helm chart v11.1.1 on a GKE cluster, with separate Ingress resources. The Operate, Identity, and Tasklist components are not running.

We are getting the following error in the Operate logs:

io.camunda.operate.archiver.Archiver - INIT: Start archiving data...

2025-02-11 18:27:23.826 [] [main] [] WARN

io.camunda.operate.zeebe.PartitionHolder - Error occurred when requesting partition ids from Zeebe client: Network closed for unknown reason

io.camunda.zeebe.client.api.command.ClientStatusException: Network closed for unknown reason

at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.transformExecutionException(ZeebeClientFutureImpl.java:116) ~[zeebe-client-java-8.6.7.jar:8.6.7]

at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.join(ZeebeClientFutureImpl.java:54) ~[zeebe-client-java-8.6.7.jar:8.6.7]

at io.camunda.webapps.zeebe.StandalonePartitionSupplier.getPartitionsCount(StandalonePartitionSupplier.java:23) ~[camunda-zeebe-8.6.7.jar:8.6.7]

at io.camunda.operate.zeebe.PartitionHolder.getPartitionIdsFromZeebe(PartitionHolder.java:109) ~[operate-common-8.6.7.jar:8.6.7]

at io.camunda.operate.zeebe.PartitionHolder.getPartitionIdsWithWaitingTimeAndRetries(PartitionHolder.java:67) ~[operate-common-8.6.7.jar:8.6.7]

at io.camunda.operate.zeebe.PartitionHolder.getPartitionIds(PartitionHolder.java:50) ~[operate-common-8.6.7.jar:8.6.7]

at io.camunda.operate.archiver.Archiver.startArchiving(Archiver.java:49) ~[operate-archiver-8.6.7.jar:8.6.7]

at java.base/jdk.internal.reflect.DirectMethodHandleAccessor.invoke(DirectMethodHandleAccessor.java:103) ~[?:?]

at java.base/java.lang.reflect.Method.invoke(Method.java:580) ~[?:?]

at org.springframework.beans.factory.annotation.InitDestroyAnnotationBeanPostProcessor$LifecycleMethod.invoke(InitDestroyAnnotationBeanPostProcessor.java:457) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.annotation.InitDestroyAnnotationBeanPostProcessor$LifecycleMetadata.invokeInitMethods(InitDestroyAnnotationBeanPostProcessor.java:401) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.annotation.InitDestroyAnnotationBeanPostProcessor.postProcessBeforeInitialization(InitDestroyAnnotationBeanPostProcessor.java:219) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.applyBeanPostProcessorsBeforeInitialization(AbstractAutowireCapableBeanFactory.java:422) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.initializeBean(AbstractAutowireCapableBeanFactory.java:1798) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.doCreateBean(AbstractAutowireCapableBeanFactory.java:600) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractAutowireCapableBeanFactory.createBean(AbstractAutowireCapableBeanFactory.java:522) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractBeanFactory.lambda$doGetBean$0(AbstractBeanFactory.java:337) ~[spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.DefaultSingletonBeanRegistry.getSingleton(DefaultSingletonBeanRegistry.java:234) [spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractBeanFactory.doGetBean(AbstractBeanFactory.java:335) [spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.AbstractBeanFactory.getBean(AbstractBeanFactory.java:200) [spring-beans-6.1.14.jar:6.1.14]

at org.springframework.beans.factory.support.DefaultListableBeanFactory.preInstantiateSingletons(DefaultListableBeanFactory.java:975) [spring-beans-6.1.14.jar:6.1.14]

at org.springframework.context.support.AbstractApplicationContext.finishBeanFactoryInitialization(AbstractApplicationContext.java:971) [spring-context-6.1.14.jar:6.1.14]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:625) [spring-context-6.1.14.jar:6.1.14]

at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.refresh(ServletWebServerApplicationContext.java:146) [spring-boot-3.3.7.jar:3.3.7]

at org.springframework.boot.SpringApplication.refresh(SpringApplication.java:754) [spring-boot-3.3.7.jar:3.3.7]

at org.springframework.boot.SpringApplication.refreshContext(SpringApplication.java:456) [spring-boot-3.3.7.jar:3.3.7]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:335) [spring-boot-3.3.7.jar:3.3.7]

at io.camunda.application.StandaloneOperate.main(StandaloneOperate.java:53) [camunda-zeebe-8.6.7.jar:8.6.7]

Caused by: java.util.concurrent.ExecutionException: io.grpc.StatusRuntimeException: UNAVAILABLE: Network closed for unknown reason

at java.base/java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:396) ~[?:?]

at java.base/java.util.concurrent.CompletableFuture.get(CompletableFuture.java:2073) ~[?:?]

at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.join(ZeebeClientFutureImpl.java:52) ~[zeebe-client-java-8.6.7.jar:8.6.7]

... 26 more

Caused by: io.grpc.StatusRuntimeException: UNAVAILABLE: Network closed for unknown reason

at io.grpc.Status.asRuntimeException(Status.java:532) ~[grpc-api-1.68.2.jar:1.68.2]

at io.grpc.stub.ClientCalls$StreamObserverToCallListenerAdapter.onClose(ClientCalls.java:481) ~[grpc-stub-1.68.2.jar:1.68.2]

at io.grpc.internal.DelayedClientCall$DelayedListener$3.run(DelayedClientCall.java:489) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.DelayedClientCall$DelayedListener.delayOrExecute(DelayedClientCall.java:453) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.DelayedClientCall$DelayedListener.onClose(DelayedClientCall.java:486) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.ClientCallImpl.closeObserver(ClientCallImpl.java:564) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.ClientCallImpl.access$100(ClientCallImpl.java:72) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.ClientCallImpl$ClientStreamListenerImpl$1StreamClosed.runInternal(ClientCallImpl.java:729) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.ClientCallImpl$ClientStreamListenerImpl$1StreamClosed.runInContext(ClientCallImpl.java:710) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.ContextRunnable.run(ContextRunnable.java:37) ~[grpc-core-1.68.2.jar:1.68.2]

at io.grpc.internal.SerializingExecutor.run(SerializingExecutor.java:133) ~[grpc-core-1.68.2.jar:1.68.2]

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1144) ~[?:?]

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:642) ~[?:?]

at java.base/java.lang.Thread.run(Thread.java:1583) ~[?:?]

2025-02-11 18:27:23.844 [] [main] [] INFO

io.camunda.operate.zeebe.PartitionHolder - Partition ids can't be fetched from Zeebe. Try next round (1).

2025-02-11 18:27:24.845 [] [main] [] WARN

io.camunda.operate.zeebe.PartitionHolder - Error occurred when requesting partition ids from Zeebe client: Network closed for unknown reason

io.camunda.zeebe.client.api.command.ClientStatusException: Network closed for unknown reason

I have not been able to find the root cause of this issue.
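Since the trace ends in a gRPC `UNAVAILABLE` status raised while Operate requests partition ids from Zeebe, one way to narrow this down is to check whether the Zeebe gateway's gRPC port is reachable at the TCP level from inside the cluster. The following is a minimal sketch, not a definitive diagnostic. The service name `camunda-zeebe-gateway` is a hypothetical placeholder that depends on the Helm release name, and 26500 is assumed to be the gateway's gRPC port (the Zeebe default); substitute the values from your own deployment.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example call (hypothetical service name, assumed default gRPC port):
# print(can_connect("camunda-zeebe-gateway", 26500))
```

If this returns `False` when run from a pod in the same namespace, the problem is likely at the service or network level. If it returns `True`, the TCP path is open and the failure may instead lie in how the gRPC channel is configured (for example, a TLS versus plaintext mismatch between client and gateway), which this check cannot detect.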