Hi,

I am having trouble setting up a Camunda 8 environment locally.

I am using the attached docker-compose file.

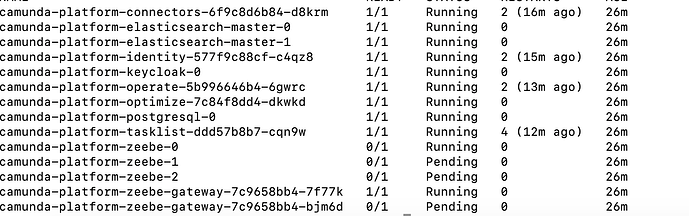

All pods are running successfully except the 4 Zeebe pods, which stay in Pending.

Here is the `kubectl describe pod` output for one of them (all four look the same):

docker-compose (1).yaml (15.1 KB)

```
Name:             camunda-platform-zeebe-1
Namespace:        default
Priority:         0
Service Account:  default
Node:
Labels:           app=camunda-platform
                  app.kubernetes.io/component=zeebe-broker
                  app.kubernetes.io/instance=camunda-platform
                  app.kubernetes.io/managed-by=Helm
                  app.kubernetes.io/name=camunda-platform
                  app.kubernetes.io/part-of=camunda-platform
                  app.kubernetes.io/version=8.3.1
                  controller-revision-hash=camunda-platform-zeebe-5f8f94896d
                  helm.sh/chart=camunda-platform-8.3.1
                  statefulset.kubernetes.io/pod-name=camunda-platform-zeebe-1
Annotations:
Status:           Pending
IP:
IPs:
Controlled By:    StatefulSet/camunda-platform-zeebe
Containers:
  zeebe:
    Image:       camunda/zeebe:8.3.1
    Ports:       9600/TCP, 26501/TCP, 26502/TCP
    Host Ports:  0/TCP, 0/TCP, 0/TCP
    Limits:
      cpu:     960m
      memory:  1920Mi
    Requests:
      cpu:      800m
      memory:   1200Mi
    Readiness:  http-get http://:9600/ready delay=30s timeout=1s period=30s #success=1 #failure=5
    Environment:
      LC_ALL:                                                  C.UTF-8
      K8S_NAME:                                                camunda-platform-zeebe-1 (v1:metadata.name)
      K8S_SERVICE_NAME:                                        camunda-platform-zeebe
      K8S_NAMESPACE:                                           default (v1:metadata.namespace)
      ZEEBE_BROKER_NETWORK_ADVERTISEDHOST:                     $(K8S_NAME).$(K8S_SERVICE_NAME).$(K8S_NAMESPACE).svc
      ZEEBE_BROKER_CLUSTER_INITIALCONTACTPOINTS:               $(K8S_SERVICE_NAME)-0.$(K8S_SERVICE_NAME).$(K8S_NAMESPACE).svc:26502, $(K8S_SERVICE_NAME)-1.$(K8S_SERVICE_NAME).$(K8S_NAMESPACE).svc:26502, $(K8S_SERVICE_NAME)-2.$(K8S_SERVICE_NAME).$(K8S_NAMESPACE).svc:26502,
      ZEEBE_BROKER_CLUSTER_CLUSTERNAME:                        camunda-platform-zeebe
      ZEEBE_LOG_LEVEL:                                         info
      ZEEBE_BROKER_CLUSTER_PARTITIONSCOUNT:                    3
      ZEEBE_BROKER_CLUSTER_CLUSTERSIZE:                        3
      ZEEBE_BROKER_CLUSTER_REPLICATIONFACTOR:                  3
      ZEEBE_BROKER_THREADS_CPUTHREADCOUNT:                     3
      ZEEBE_BROKER_THREADS_IOTHREADCOUNT:                      3
      ZEEBE_BROKER_GATEWAY_ENABLE:                             false
      ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_CLASSNAME:          io.camunda.zeebe.exporter.ElasticsearchExporter
      ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_URL:           http://camunda-platform-elasticsearch:9200
      ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_INDEX_PREFIX:  zeebe-record
      ZEEBE_BROKER_NETWORK_COMMANDAPI_PORT:                    26501
      ZEEBE_BROKER_NETWORK_INTERNALAPI_PORT:                   26502
      ZEEBE_BROKER_NETWORK_MONITORINGAPI_PORT:                 9600
      K8S_POD_NAME:                                            camunda-platform-zeebe-1 (v1:metadata.name)
      JAVA_TOOL_OPTIONS:                                       -XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/usr/local/zeebe/data -XX:ErrorFile=/usr/local/zeebe/data/zeebe_error%p.log -XX:+ExitOnOutOfMemoryError
      ZEEBE_BROKER_DATA_SNAPSHOTPERIOD:                        5m
      ZEEBE_BROKER_DATA_DISK_FREESPACE_REPLICATION:            2GB
      ZEEBE_BROKER_DATA_DISK_FREESPACE_PROCESSING:             3GB
    Mounts:
      /exporters from exporters (rw)
      /tmp from tmp (rw)
      /usr/local/bin/startup.sh from config (rw,path="startup.sh")
      /usr/local/zeebe/data from data (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-lnmsz (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  data:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  data-camunda-platform-zeebe-1
    ReadOnly:   false
  config:
    Type:      ConfigMap (a volume populated by a ConfigMap)
    Name:      camunda-platform-zeebe
    Optional:  false
  exporters:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:
  tmp:
    Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
    SizeLimit:
  kube-api-access-lnmsz:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age                 From               Message
  Warning  FailedScheduling  3m7s (x8 over 28m)  default-scheduler  0/1 nodes are available: 1 node(s) didn't match pod anti-affinity rules. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod…
```
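The last event points at scheduling rather than at Zeebe itself. The cluster has a single node (`0/1 nodes are available`), the scheduler rejects the pod because of a pod anti-affinity rule, and the broker environment asks for a 3-broker cluster (`ZEEBE_BROKER_CLUSTER_CLUSTERSIZE: 3`, `ZEEBE_BROKER_CLUSTER_REPLICATIONFACTOR: 3`). If that rule requires each broker to run on a different node (an assumption, since the rule itself is not shown in the describe output), only one broker can ever fit on one node. The labels show the pod comes from the `camunda-platform` Helm chart 8.3.1, so a minimal sketch of a single-node values override could look like the following. The key names (`zeebe.clusterSize`, `zeebe.partitionCount`, `zeebe.replicationFactor`, `zeebe.affinity.podAntiAffinity`) are assumed from that chart and should be checked against `helm show values camunda/camunda-platform --version 8.3.1` (assuming the chart repository was added under the name `camunda`); the file name `values-single-node.yaml` is hypothetical.

```yaml
# values-single-node.yaml (hypothetical file name)
# Assumed keys from the camunda-platform Helm chart; verify with `helm show values`.
zeebe:
  # Run one broker instead of three, so only one broker pod needs the single node.
  # These are assumed to drive ZEEBE_BROKER_CLUSTER_CLUSTERSIZE,
  # ZEEBE_BROKER_CLUSTER_PARTITIONSCOUNT and ZEEBE_BROKER_CLUSTER_REPLICATIONFACTOR.
  clusterSize: 1
  partitionCount: 1
  replicationFactor: 1
  # Setting a key to null in a Helm values file removes the chart's default,
  # here the anti-affinity rule the scheduler reports in the FailedScheduling event.
  affinity:
    podAntiAffinity: null
```

It would be applied to the existing release (named `camunda-platform`, per the `app.kubernetes.io/instance` label) with something like `helm upgrade camunda-platform camunda/camunda-platform --version 8.3.1 -f values-single-node.yaml`. Nulling `podAntiAffinity` rather than simply leaving it out matters, because Helm merges user values over the chart defaults and an omitted key would keep the default rule.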