Hi there,

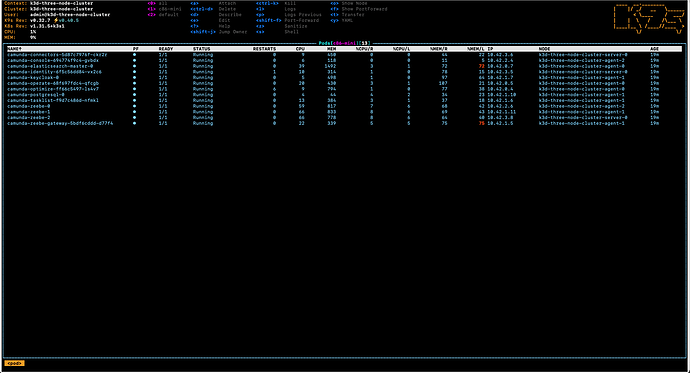

Are multiple broker nodes (clusterSize of 3 or more) supported in a local Kubernetes-based installation?

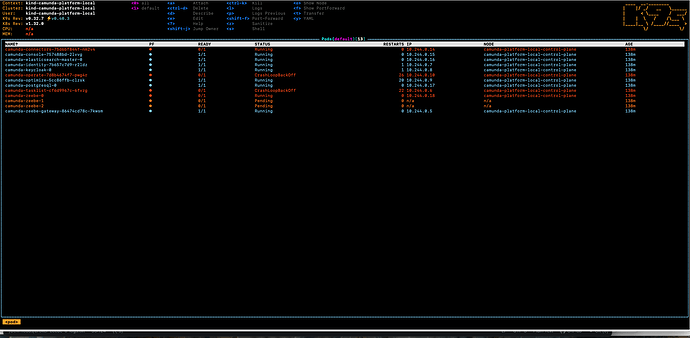

The setup works when clusterSize is set to 1, but I see errors when I change it to 3.

Any suggestions?

The values YAML file is attached.

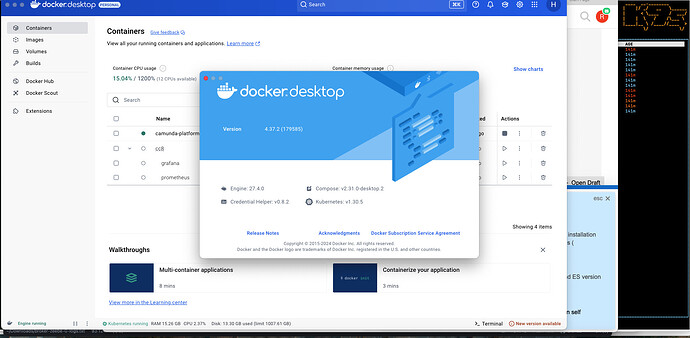

Environment: Docker Desktop

Helm chart: 11.2.1

I tried the following configurations (using the values YAML file):

clusterSize: 1, partitionCount: 5, replicationFactor: 1 => works fine

clusterSize: 3, partitionCount: 5, replicationFactor: 1 => errors
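To make the difference between the two configurations concrete, here is a minimal Python sketch of how 5 partitions would be spread across the brokers. It assumes Zeebe's default round-robin distribution (partition p, counted from 1, starts at broker (p - 1) % clusterSize and is replicated to the next replicationFactor brokers). The function `distribute_partitions` is a hypothetical helper for illustration, not part of any Camunda API.

```python
# Hypothetical helper: sketches round-robin partition distribution.
# Assumption: partition p (1-based) is placed on brokers starting at
# (p - 1) % cluster_size, taking replication_factor consecutive brokers.


def distribute_partitions(cluster_size: int, partition_count: int,
                          replication_factor: int) -> dict[int, list[int]]:
    """Return a mapping of broker id -> list of partition ids it hosts."""
    if replication_factor > cluster_size:
        raise ValueError("replicationFactor cannot exceed clusterSize")
    brokers: dict[int, list[int]] = {b: [] for b in range(cluster_size)}
    for partition in range(1, partition_count + 1):
        start = (partition - 1) % cluster_size
        # Place one replica on each of the next replication_factor brokers.
        for offset in range(replication_factor):
            brokers[(start + offset) % cluster_size].append(partition)
    return brokers


if __name__ == "__main__":
    # The two configurations tried above.
    for size in (1, 3):
        print(size, distribute_partitions(size, 5, 1))
```

Under this assumption, clusterSize: 1 puts all 5 partitions on broker 0, while clusterSize: 3 with replicationFactor: 1 gives each partition exactly one replica (broker 0: 1 and 4, broker 1: 2 and 5, broker 2: 3), so each broker is the only holder of its partitions.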

Error details from the Operate pod logs (default/camunda-operate-7d8b4674f7-pwg4r):

2025-02-17 13:01:08.638 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (55).

2025-02-17 13:01:09.640 [main] WARN io.camunda.zeebe.client.impl.ZeebeCallCredentials - The request’s security level does not guarantee that the credentials will be confidential.

2025-02-17 13:01:09.642 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (56).

2025-02-17 13:01:10.643 [main] WARN io.camunda.zeebe.client.impl.ZeebeCallCredentials - The request’s security level does not guarantee that the credentials will be confidential.

2025-02-17 13:01:10.645 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (57).

2025-02-17 13:01:11.647 [main] WARN io.camunda.zeebe.client.impl.ZeebeCallCredentials - The request’s security level does not guarantee that the credentials will be confidential.

2025-02-17 13:01:11.649 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (58).

2025-02-17 13:01:12.651 [main] WARN io.camunda.zeebe.client.impl.ZeebeCallCredentials - The request’s security level does not guarantee that the credentials will be confidential.

2025-02-17 13:01:12.653 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (59).

2025-02-17 13:01:13.656 [main] WARN io.camunda.zeebe.client.impl.ZeebeCallCredentials - The request’s security level does not guarantee that the credentials will be confidential.

2025-02-17 13:01:13.664 [main] INFO io.camunda.operate.zeebe.PartitionHolder - Partition ids can’t be fetched from Zeebe. Try next round (60).

A similar error also appears in the Tasklist pod logs (default/camunda-tasklist-cf6d9967c-6fvzg):

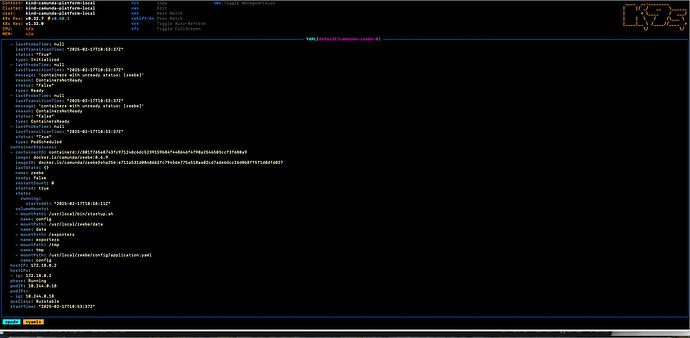

I also see the following in the broker logs (camunda-zeebe-0):

io.atomix.cluster.protocol.swim.probe - 0 - Failed all probes of Member{id=camunda-tasklist-cf6d9967c-6fvzg, address=10.244.0.6:26502, properties={event-service-topics-subscribed=KIIDAGpvYnNBdmFpbGFibOU=}}. Marking as suspect.

2025-02-17 13:01:41.332 [Broker-0] [zb-actors-1] ] WARN io.camunda.zeebe.dynamic.config.gossip.ClusterConfigurationGossiper - Failed to sync with camunda-tasklist-cf6d9967c-6fvzg

java.util.concurrent.CompletionException: java.net.ConnectException: Failed to connect channel for address 10.244.0.6:26502 (resolved: null) : io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: /10.244.0.6:26502

Caused by: io.atomix.cluster.messaging.MessagingException$NoRemoteHandler: No remote message handler registered for this message, subject cluster-topology-sync

… 32 more

2025-02-17 13:06:20.374 [Broker-0] [zb-actors-2] ] WARN io.camunda.zeebe.dynamic.config.gossip.ClusterConfigurationGossiper - Failed to sync with camunda-operate-7d8b4674f7-pwg4r

java.util.concurrent.CompletionException: io.atomix.cluster.messaging.MessagingException$NoRemoteHandler: No remote message handler registered for this message, subject cluster-topology-sync
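The first broker warning reports "Connection refused" for 10.244.0.6:26502, the address of the Tasklist pod as it appears in the cluster membership. A minimal Python sketch for checking, from a pod inside the cluster, whether that address accepts TCP connections at all is below. `can_connect` is a hypothetical helper; it only tests plain TCP reachability and cannot tell whether a handler for the `cluster-topology-sync` subject is registered on the remote side (the `NoRemoteHandler` error suggests the connection reached a member without such a handler).

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (incl. refused/timeout) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Address taken from the broker log above; only meaningful inside the cluster.
    print(can_connect("10.244.0.6", 26502))
```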

Values YAML file (attached):

values-combined-ingress.yaml (5.7 KB)

Thanks in advance.