I am using Camunda 8 Self-Managed and cannot figure out why my Operate component keeps restarting. The issue started after I configured it to use an existing OpenSearch instance.

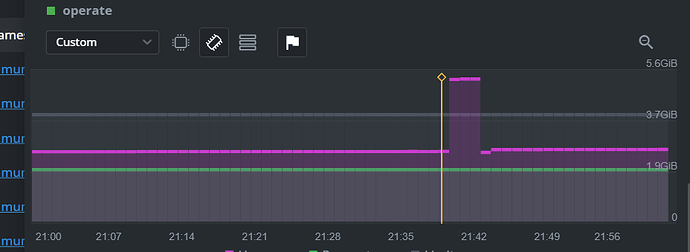

It restarts at random. With only 22 process instances its memory consumption is 2.8 GB, and sometimes it also fails with an out-of-heap-space error. I have been trying to figure out what I should configure so that Operate runs in a healthy state.

This is my Operate configuration:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: camunda-platform-operate
  namespace: camunda
  uid: 7e795760-cb45-4f6c-b611-a44978174052
  resourceVersion: '5695766'
  generation: 16
  creationTimestamp: '2024-09-21T13:47:47Z'
  labels:
    app: camunda-platform
    app.kubernetes.io/component: operate
    app.kubernetes.io/instance: camunda-platform
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: camunda-platform
    app.kubernetes.io/part-of: camunda-platform
    app.kubernetes.io/version: 8.5.0
    helm.sh/chart: camunda-platform-10.0.4
    k8slens-edit-resource-version: v1
  annotations:
    deployment.kubernetes.io/revision: '13'
    meta.helm.sh/release-name: camunda-platform
    meta.helm.sh/release-namespace: camunda
  managedFields:
    - manager: node-fetch
      operation: Update
      apiVersion: apps/v1
      fieldsType: FieldsV1
      fieldsV1:
        f:spec:
          f:replicas: {}
      subresource: scale
    - manager: helm
      operation: Update
      apiVersion: apps/v1
      time: '2024-09-21T13:47:47Z'
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            .: {}
            f:meta.helm.sh/release-name: {}
            f:meta.helm.sh/release-namespace: {}
          f:labels:
            .: {}
            f:app: {}
            f:app.kubernetes.io/component: {}
            f:app.kubernetes.io/instance: {}
            f:app.kubernetes.io/managed-by: {}
            f:app.kubernetes.io/name: {}
            f:app.kubernetes.io/part-of: {}
            f:app.kubernetes.io/version: {}
            f:helm.sh/chart: {}
        f:spec:
          f:progressDeadlineSeconds: {}
          f:revisionHistoryLimit: {}
          f:selector: {}
          f:strategy:
            f:rollingUpdate:
              .: {}
              f:maxSurge: {}
              f:maxUnavailable: {}
            f:type: {}
          f:template:
            f:metadata:
              f:annotations:
                .: {}
                f:checksum/config: {}
              f:labels:
                .: {}
                f:app: {}
                f:app.kubernetes.io/component: {}
                f:app.kubernetes.io/instance: {}
                f:app.kubernetes.io/managed-by: {}
                f:app.kubernetes.io/name: {}
                f:app.kubernetes.io/part-of: {}
                f:app.kubernetes.io/version: {}
                f:helm.sh/chart: {}
            f:spec:
              f:containers:
                k:{"name":"operate"}:
                  .: {}
                  f:env:
                    .: {}
                    k:{"name":"CAMUNDA_IDENTITY_CLIENT_SECRET"}:
                      .: {}
                      f:name: {}
                      f:valueFrom:
                        .: {}
                        f:secretKeyRef: {}
                    k:{"name":"CAMUNDA_OPERATE_OPENSEARCH_PASSWORD"}:
                      .: {}
                      f:name: {}
                      f:valueFrom:
                        .: {}
                        f:secretKeyRef: {}
                    k:{"name":"CAMUNDA_OPERATE_ZEEBE_OPENSEARCH_PASSWORD"}:
                      .: {}
                      f:name: {}
                      f:valueFrom:
                        .: {}
                        f:secretKeyRef: {}
                    k:{"name":"ZEEBE_AUTHORIZATION_SERVER_URL"}:
                      .: {}
                      f:name: {}
                      f:value: {}
                    k:{"name":"ZEEBE_CLIENT_CONFIG_PATH"}:
                      .: {}
                      f:name: {}
                      f:value: {}
                    k:{"name":"ZEEBE_CLIENT_ID"}:
                      .: {}
                      f:name: {}
                      f:value: {}
                    k:{"name":"ZEEBE_CLIENT_SECRET"}:
                      .: {}
                      f:name: {}
                      f:valueFrom:
                        .: {}
                        f:secretKeyRef: {}
                    k:{"name":"ZEEBE_TOKEN_AUDIENCE"}:
                      .: {}
                      f:name: {}
                      f:value: {}
                  f:envFrom: {}
                  f:image: {}
                  f:imagePullPolicy: {}
                  f:name: {}
                  f:ports:
                    .: {}
                    k:{"containerPort":8080,"protocol":"TCP"}:
                      .: {}
                      f:containerPort: {}
                      f:name: {}
                      f:protocol: {}
                  f:readinessProbe:
                    .: {}
                    f:failureThreshold: {}
                    f:httpGet:
                      .: {}
                      f:path: {}
                      f:port: {}
                      f:scheme: {}
                    f:initialDelaySeconds: {}
                    f:periodSeconds: {}
                    f:successThreshold: {}
                    f:timeoutSeconds: {}
                  f:resources:
                    .: {}
                    f:limits:
                      .: {}
                      f:cpu: {}
                    f:requests:
                      .: {}
                      f:cpu: {}
                  f:securityContext:
                    .: {}
                    f:allowPrivilegeEscalation: {}
                    f:privileged: {}
                    f:readOnlyRootFilesystem: {}
                    f:runAsNonRoot: {}
                    f:runAsUser: {}
                  f:terminationMessagePath: {}
                  f:terminationMessagePolicy: {}
                  f:volumeMounts:
                    .: {}
                    k:{"mountPath":"/camunda"}:
                      .: {}
                      f:mountPath: {}
                      f:name: {}
                    k:{"mountPath":"/tmp"}:
                      .: {}
                      f:mountPath: {}
                      f:name: {}
                    k:{"mountPath":"/usr/local/operate/config/application.yml"}:
                      .: {}
                      f:mountPath: {}
                      f:name: {}
                      f:subPath: {}
              f:dnsPolicy: {}
              f:restartPolicy: {}
              f:schedulerName: {}
              f:securityContext:
                .: {}
                f:fsGroup: {}
                f:runAsNonRoot: {}
              f:terminationGracePeriodSeconds: {}
              f:volumes:
                .: {}
                k:{"name":"camunda"}:
                  .: {}
                  f:emptyDir: {}
                  f:name: {}
                k:{"name":"config"}:
                  .: {}
                  f:configMap:
                    .: {}
                    f:defaultMode: {}
                    f:name: {}
                  f:name: {}
                k:{"name":"tmp"}:
                  .: {}
                  f:emptyDir: {}
                  f:name: {}
    - manager: manager
      operation: Update
      apiVersion: apps/v1
      time: '2024-09-23T09:07:04Z'
      fieldsType: FieldsV1
      fieldsV1:
        f:spec:
          f:template:
            f:metadata:
              f:annotations:
                f:cloudwatch.aws.amazon.com/auto-annotate-dotnet: {}
                f:cloudwatch.aws.amazon.com/auto-annotate-java: {}
                f:cloudwatch.aws.amazon.com/auto-annotate-nodejs: {}
                f:cloudwatch.aws.amazon.com/auto-annotate-python: {}
                f:instrumentation.opentelemetry.io/inject-dotnet: {}
                f:instrumentation.opentelemetry.io/inject-java: {}
                f:instrumentation.opentelemetry.io/inject-nodejs: {}
                f:instrumentation.opentelemetry.io/inject-python: {}
    - manager: node-fetch
      operation: Update
      apiVersion: apps/v1
      time: '2024-09-25T10:26:00Z'
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:labels:
            f:k8slens-edit-resource-version: {}
        f:spec:
          f:template:
            f:spec:
              f:containers:
                k:{"name":"operate"}:
                  f:env:
                    k:{"name":"JAVA_OPTS"}:
                      .: {}
                      f:name: {}
                      f:value: {}
                  f:resources:
                    f:limits:
                      f:memory: {}
                    f:requests:
                      f:memory: {}
    - manager: kube-controller-manager
      operation: Update
      apiVersion: apps/v1
      time: '2024-09-25T10:56:07Z'
      fieldsType: FieldsV1
      fieldsV1:
        f:metadata:
          f:annotations:
            f:deployment.kubernetes.io/revision: {}
        f:status:
          f:availableReplicas: {}
          f:conditions:
            .: {}
            k:{"type":"Available"}:
              .: {}
              f:lastTransitionTime: {}
              f:lastUpdateTime: {}
              f:message: {}
              f:reason: {}
              f:status: {}
              f:type: {}
            k:{"type":"Progressing"}:
              .: {}
              f:lastTransitionTime: {}
              f:lastUpdateTime: {}
              f:message: {}
              f:reason: {}
              f:status: {}
              f:type: {}
          f:observedGeneration: {}
          f:readyReplicas: {}
          f:replicas: {}
          f:updatedReplicas: {}
      subresource: status
  selfLink: /apis/apps/v1/namespaces/camunda/deployments/camunda-platform-operate
status:
  observedGeneration: 16
  replicas: 3
  updatedReplicas: 3
  readyReplicas: 3
  availableReplicas: 3
  conditions:
    - type: Progressing
      status: 'True'
      lastUpdateTime: '2024-09-25T10:26:31Z'
      lastTransitionTime: '2024-09-23T10:48:00Z'
      reason: NewReplicaSetAvailable
      message: >-
        ReplicaSet "camunda-platform-operate-7d7c867694" has successfully
        progressed.
    - type: Available
      status: 'True'
      lastUpdateTime: '2024-09-25T10:56:07Z'
      lastTransitionTime: '2024-09-25T10:56:07Z'
      reason: MinimumReplicasAvailable
      message: Deployment has minimum availability.
spec:
  replicas: 3
  selector:
    matchLabels:
      app: camunda-platform
      app.kubernetes.io/component: operate
      app.kubernetes.io/instance: camunda-platform
      app.kubernetes.io/managed-by: Helm
      app.kubernetes.io/name: camunda-platform
      app.kubernetes.io/part-of: camunda-platform
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: camunda-platform
        app.kubernetes.io/component: operate
        app.kubernetes.io/instance: camunda-platform
        app.kubernetes.io/managed-by: Helm
        app.kubernetes.io/name: camunda-platform
        app.kubernetes.io/part-of: camunda-platform
        app.kubernetes.io/version: 8.5.0
        helm.sh/chart: camunda-platform-10.0.4
      annotations:
        checksum/config: c4cf51ae1f87a5ba3d9933a82f6d051362361d7c38b689af93c6751c7aed0e09
        cloudwatch.aws.amazon.com/auto-annotate-dotnet: 'true'
        cloudwatch.aws.amazon.com/auto-annotate-java: 'true'
        cloudwatch.aws.amazon.com/auto-annotate-nodejs: 'true'
        cloudwatch.aws.amazon.com/auto-annotate-python: 'true'
        instrumentation.opentelemetry.io/inject-dotnet: 'true'
        instrumentation.opentelemetry.io/inject-java: 'true'
        instrumentation.opentelemetry.io/inject-nodejs: 'true'
        instrumentation.opentelemetry.io/inject-python: 'true'
    spec:
      volumes:
        - name: config
          configMap:
            name: camunda-platform-operate-configuration
            defaultMode: 484
        - name: tmp
          emptyDir: {}
        - name: camunda
          emptyDir: {}
      containers:
        - name: operate
          image: camunda/operate:8.5.0
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
          envFrom:
            - configMapRef:
                name: camunda-platform-identity-env-vars
          env:
            - name: CAMUNDA_OPERATE_ZEEBE_OPENSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: camunda-platform-opensearch
                  key: password
            - name: CAMUNDA_OPERATE_OPENSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: camunda-platform-opensearch
                  key: password
            - name: CAMUNDA_IDENTITY_CLIENT_SECRET
              valueFrom:
                secretKeyRef:
                  name: camunda-platform-operate-identity-secret
                  key: operate-secret
            - name: ZEEBE_CLIENT_ID
              value: zeebe
            - name: ZEEBE_CLIENT_SECRET
              valueFrom:
                secretKeyRef:
                  name: camunda-platform-zeebe-identity-secret
                  key: zeebe-secret
            - name: ZEEBE_AUTHORIZATION_SERVER_URL
              value: >-
                http://camunda-platform-keycloak:80/auth/realms/camunda-platform/protocol/openid-connect/token
            - name: ZEEBE_TOKEN_AUDIENCE
              value: zeebe-api
            - name: ZEEBE_CLIENT_CONFIG_PATH
              value: /tmp/zeebe_auth_cache
            - name: JAVA_OPTS
              value: '-Xmx4g -Xms2048m -XX:+PrintGCDetails'
          resources:
            limits:
              cpu: '2'
              memory: 4Gi
            requests:
              cpu: 600m
              memory: 2Gi
          volumeMounts:
            - name: config
              mountPath: /usr/local/operate/config/application.yml
              subPath: application.yml
            - name: tmp
              mountPath: /tmp
            - name: camunda
              mountPath: /camunda
          readinessProbe:
            httpGet:
              path: /operate/actuator/health/readiness
              port: http
              scheme: HTTP
            initialDelaySeconds: 30
            timeoutSeconds: 1
            periodSeconds: 30
            successThreshold: 1
            failureThreshold: 5
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          imagePullPolicy: IfNotPresent
          securityContext:
            privileged: false
            runAsUser: 1001
            runAsNonRoot: true
            readOnlyRootFilesystem: true
            allowPrivilegeEscalation: false
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      dnsPolicy: ClusterFirst
      securityContext:
        runAsNonRoot: true
        fsGroup: 1001
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 25%
      maxSurge: 25%
  revisionHistoryLimit: 10
  progressDeadlineSeconds: 600
```
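
One detail in this Deployment may be relevant to the restarts: `JAVA_OPTS` sets `-Xmx4g` while the container memory limit is also `4Gi`. A JVM uses memory beyond the heap (metaspace, thread stacks, direct buffers), so a heap ceiling equal to the container limit leaves no headroom, and Kubernetes may kill the container once total usage crosses the limit. Below is a minimal sketch of a more conservative container fragment. The `3g` heap ceiling is an untested assumption for illustration, not a Camunda recommendation, and everything else is copied from the manifest.

```yaml
# Illustrative fragment of the "operate" container spec only.
# Assumption: a 3 GiB heap is enough for this workload; adjust after measuring.
env:
  - name: JAVA_OPTS
    # Heap ceiling kept below the 4Gi container limit to leave room
    # for non-heap JVM memory.
    value: '-Xms2g -Xmx3g'
resources:
  limits:
    cpu: '2'
    memory: 4Gi
  requests:
    cpu: 600m
    memory: 2Gi
```

If this is the cause, `kubectl describe pod` on a restarted Operate pod would be expected to show `OOMKilled` as the reason for the last container termination, whereas a heap exhaustion inside the JVM would show up as an error in the Operate logs instead.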