Hi Guys,

I’ve searched the docs for leads on how to size the Camunda backend databases (runtime and history), but I haven’t found any direct recommendation or formula. I know it depends on factors such as the history time to live (TTL), the average process instance (PI) cycle time, and the number of variables per PI.

So I’m reaching out in case you can recommend something based on experience. For example, is the runtime DB usually 1:1 with the history DB, or is there a rule-of-thumb proportion?
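As a rough illustration of how the factors above interact (a back-of-the-envelope sketch, not an official Camunda formula): by Little's law, the runtime tables hold about `instances per day × average cycle time` instances, while the history tables hold about `instances per day × (cycle time + TTL)` instances. This assumes the removal time is computed from the instance end time and that history cleanup keeps up. All input values, including the per-instance byte sizes, are hypothetical and have to be measured for your own process.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SizingInputs:
    # Hypothetical inputs; measure them for your own process and environment.
    instances_per_day: float           # new process instances started per day
    avg_cycle_time_days: float         # average start-to-end duration of one instance
    history_ttl_days: float            # history time to live after an instance ends
    runtime_bytes_per_instance: float  # measured runtime footprint of one running instance
    history_bytes_per_instance: float  # measured history footprint of one instance


def estimate_sizes(s: SizingInputs) -> tuple[float, float]:
    """Return (runtime_bytes, history_bytes) in steady state.

    Little's law: items in the system = arrival rate * time spent in the system.
    Runtime data lives for the cycle time; history data is written while the
    instance runs and is kept for the TTL after it ends (assumes cleanup keeps up).
    """
    in_flight = s.instances_per_day * s.avg_cycle_time_days
    retained = s.instances_per_day * (s.avg_cycle_time_days + s.history_ttl_days)
    return (in_flight * s.runtime_bytes_per_instance,
            retained * s.history_bytes_per_instance)
```

With made-up numbers (1,000 instances per day, 2-day cycle time, 30-day TTL, 50 KB runtime and 20 KB history per instance), this gives 100 MB of runtime data versus 640 MB of history, which shows why the ratio is rarely 1:1: it is driven mainly by how the TTL compares to the cycle time.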

Thanks! Any leads or recommendations would be helpful.

Hi @edcapulong,

have a look at the best practice guide on sizing: Sizing Your Camunda 7 Environment | Camunda Cloud Docs

Hope this helps, Ingo

Hi @Ingo_Richtsmeier!

Thank you for the response, as always. After reading the docs, it seems it would be really hard to size the databases up front without running actual load tests. If I may ask, how do you usually compute the size per process instance (PI)?

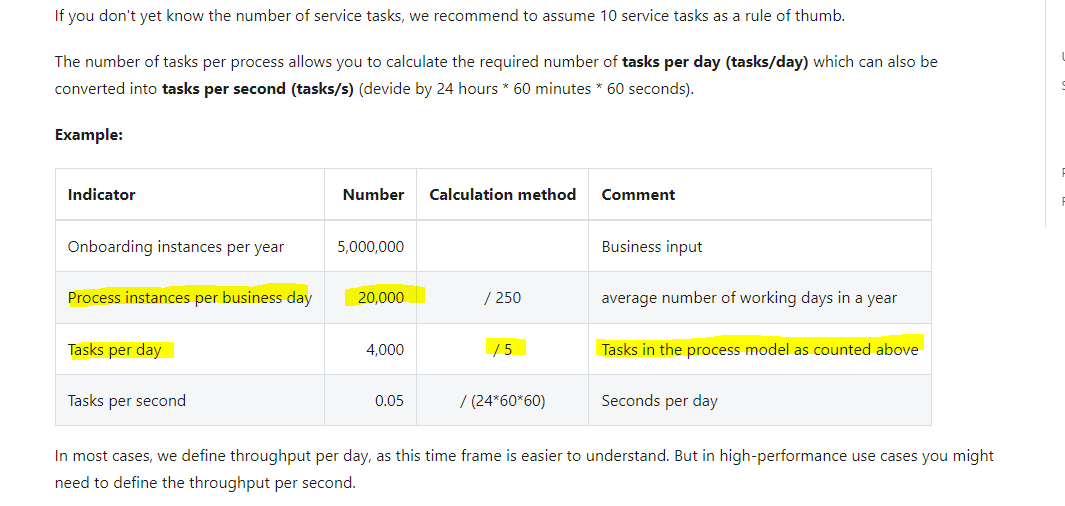

I also read the sizing documentation, and I’m a bit confused by this table. Isn’t the computation a bit off?

Why are the 20,000 PIs per day divided by 5? Shouldn’t they be multiplied by 5, since each PI is assumed to have 5 tasks?

Thanks!!!

*Table taken from the Camunda Cloud sizing documentation.

Hi @edcapulong,

please don’t mix sizing for Camunda Cloud with sizing for Camunda Platform. They use completely different technologies under the hood.

For Camunda Platform, you can start a number of instances of your process against an empty database and then measure the space the data occupies. Most databases have metadata tables or views from which you can read the used disk space.

Then do the math: Bytes / number of instances.
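A minimal sketch of that calculation, assuming you record the used space once as a baseline (schema and deployments only) and once after starting a known number of instances. How you read the used space depends on your database's metadata views, so the byte counts here are hypothetical inputs. Subtracting the baseline keeps the engine's own metadata out of the per-instance figure.

```python
from __future__ import annotations


def bytes_per_instance(used_bytes_before: int, used_bytes_after: int,
                       instances_started: int) -> float:
    """Average space one process instance adds to the database.

    used_bytes_before: used space with the schema and deployments only (baseline)
    used_bytes_after:  used space after starting `instances_started` instances
    """
    if instances_started <= 0:
        raise ValueError("instances_started must be positive")
    if used_bytes_after < used_bytes_before:
        raise ValueError("after-measurement is smaller than the baseline")
    return (used_bytes_after - used_bytes_before) / instances_started


def projected_bytes(per_instance: float, instance_count: int) -> float:
    """Naive linear extrapolation to a target number of instances."""
    return per_instance * instance_count
```

For example, a 10 MB baseline that grows to 60 MB after 1,000 instances gives 50,000 bytes per instance. Databases tend to allocate space in pages or extents, so a larger sample gives a more stable average than a handful of instances.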

Hope this helps, Ingo

Hi @Ingo_Richtsmeier

Thank you so much for the tip! One question: is it safe to clear the ACT_GE_BYTEARRAY table? It grows very fast because attachments are part of our process model flow. Or does Camunda need it for some of its critical tasks?

The plan is: once the BLOB for an attachment has been written to the byte array table, we copy it to an object store and immediately delete the record from Camunda’s byte array table.

Thanks!

Hi @edcapulong,

DON’T clear the ACT_GE_BYTEARRAY table, as it also contains the deployed process definitions and the data of running process instances. Your process engine will stop working.

For load measurement, start with a completely empty database.

Hope this helps, Ingo

Hi @Ingo_Richtsmeier

Thank you for the advice. I just wanted to check whether there are any best practices for dealing with the ACT_GE_BYTEARRAY table. It can grow really fast, especially if attachments are part of the process, since it stores them as BLOBs, right?

Also, are there any tools, open source or otherwise, that you use for measuring performance?

Thank you!

Hi @edcapulong,

the best way to reduce the size of this table is to reduce the amount of data that your process creates.

Which data do you really need after the process instance is completed? How long do you need the data in Cockpit? Do you really need to save the attachments as process variables, or is there a better system to store them in?
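One common way to act on the last question is the claim-check pattern: put the attachment in an external store and keep only a small reference in the process variables, so the BLOB never reaches ACT_GE_BYTEARRAY. The sketch below is purely illustrative: `ObjectStore` is a hypothetical in-memory stand-in and the variables are a plain dict, not the Camunda API. A real implementation would call your object store's client and set the variable through the engine.

```python
from __future__ import annotations

import hashlib


class ObjectStore:
    """Hypothetical in-memory stand-in for an external object store."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # Content-addressed key: identical attachments are stored only once.
        key = hashlib.sha256(data).hexdigest()
        self._blobs[key] = data
        return key

    def get(self, key: str) -> bytes:
        return self._blobs[key]


def attach(variables: dict[str, object], name: str, data: bytes,
           store: ObjectStore) -> None:
    """Store the attachment externally and keep only its key as a variable."""
    variables[name + "Ref"] = store.put(data)
```

Whatever the attachment size, the process variable stays a 64-character hex string, and the attachment is fetched from the store only when a task actually needs it.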

The most flexible option is to implement a custom history level, as in this example: camunda-bpm-examples/process-engine-plugin/custom-history-level at master · camunda/camunda-bpm-examples · GitHub. It gives you very fine-grained control over which data is stored in the history.

I don’t know of any performance measurement tools.

Hope this helps, Ingo