I want to split the DMNs according to DMN sizing limits. To achieve this, I want to use MongoDB to act as the DMN engine.

Hi,

I don't quite follow what you are asking. DMN is a rules execution concept, whereas MongoDB is a distributed NoSQL document store. What problem are you really trying to solve?

regards

Rob

Hi Rob,

I wish to know if there will be a performance hit if I have 100K records in a single DMN.

What should be the split strategy or best practice?

The scenario is explained below.

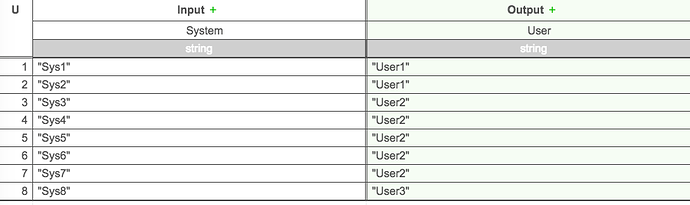

- In Scenario 2, as shown above, I have 100K records in a single DMN.

- The record towards the end of the table is the matching record.

Will there be a performance hit in this case during decision making?

Hi,

Thank you for your clarification.

I would suggest that DMN is best suited to human-managed, readable rule sets. It looks like you have a very large lookup table. Whilst you could implement this in DMN, an alternative lookup approach (for example, a keyed lookup against a data store) may be a more elegant solution.

In terms of performance, yes, a large table will likely incur a near-linear increase in compute time as the number of rows grows. However, the DMN engine is quite quick. There's nothing like a POC if you want some hard numbers.

regards

Rob
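
To make the scaling argument above concrete, here is a minimal sketch in Python. It does not call a DMN engine at all; it only contrasts a top-to-bottom first-match scan (roughly how a large table with the matching row near the end behaves) with a keyed lookup. The table contents, the names `ROWS`, `INDEX`, `linear_first_match` and `indexed_lookup`, and the key format are all hypothetical, chosen purely for illustration. A real POC would time the actual engine with the real table.

```python
import timeit

# Hypothetical lookup table: 100K rows, each mapping one input key to one output.
ROWS = [(f"key-{i}", f"result-{i}") for i in range(100_000)]


def linear_first_match(rows, key):
    """Scan rows top to bottom and return the output of the first match."""
    for candidate, output in rows:
        if candidate == key:
            return output
    return None


# Build a hash index once; setdefault keeps the first row seen for each key,
# which mirrors first-match semantics if keys were ever duplicated.
INDEX = {}
for candidate, output in ROWS:
    INDEX.setdefault(candidate, output)


def indexed_lookup(index, key):
    """Return the output for key via a hash lookup, or None if absent."""
    return index.get(key)


if __name__ == "__main__":
    target = "key-99999"  # worst case for the scan: the last row matches
    scan_s = timeit.timeit(lambda: linear_first_match(ROWS, target), number=20)
    hash_s = timeit.timeit(lambda: indexed_lookup(INDEX, target), number=20)
    print(f"linear scan:  {scan_s:.4f}s for 20 lookups")
    print(f"hash lookup:  {hash_s:.6f}s for 20 lookups")
```

The absolute timings depend on the machine and say nothing about the DMN engine itself. The point is only that the scan cost grows with the position of the matching row, while the keyed lookup does not, which is why a dedicated lookup may suit a 100K-row table better than a single decision table.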