Hello,

I want to use Kafka together with Zeebe.

I used docker-compose to start zeebe-operate (working fine) with Node.js, and to start Kafka, Connect, ZooKeeper, and Control Center from the docker-compose file I found in the kafka-connect-zeebe project.

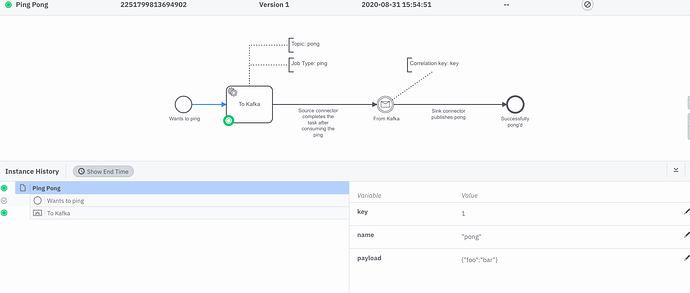

While following the ping-pong example, I

- built the connector and moved the jar to the target folder

- deployed the workflow and created an instance, which got stuck at the first "To Kafka" step

- started the source and sink connectors according to the documentation, but the sink's status is always "degraded".

Does anyone know why the instance is not proceeding and why the sink is not working?

Am I missing some configuration, or have I forgotten something?

Thanks a lot.

I suggest checking this blog post, which should help you: https://zeebe.io/blog/2019/08/official-kafka-connector-for-zeebe/

Thanks a lot for the answer.

As I said above, I followed the example shown in that article and ran into the problems described above.

Is the screenshot not visible?

Hi @giorgosnty, I have the same issue.

According to my research, the error comes from the Kafka sink connector when it tries to parse the message. You can verify this by following the Connect container's logs: `docker logs -f <container-name>`
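Besides the container logs, the Kafka Connect REST API reports the state of a connector and of each of its tasks at `GET /connectors/<name>/status`, and a failed task's entry also carries a `trace` field with its stack trace. The Python sketch below is one way to list the unhealthy tasks. It assumes Connect's REST API is reachable on its default port 8083 and that the sink is registered under the hypothetical name `zeebe-sink`; substitute the name from your own sink.json.

```python
import json
import urllib.request
from urllib.parse import quote

# Assumptions: Connect's REST API on its default port, and a hypothetical
# connector name; use the "name" value from your own sink.json.
CONNECT_URL = "http://localhost:8083"
CONNECTOR_NAME = "zeebe-sink"


def fetch_status(base_url: str, name: str) -> dict:
    """GET /connectors/<name>/status and decode the JSON response body."""
    url = f"{base_url}/connectors/{quote(name)}/status"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def unhealthy_tasks(status: dict) -> list[tuple[int, str, str]]:
    """Return (task id, state, first line of trace) for every task not RUNNING."""
    result = []
    for task in status.get("tasks", []):
        if task.get("state") != "RUNNING":
            trace = task.get("trace", "")
            first_line = trace.splitlines()[0] if trace else ""
            result.append((task["id"], task["state"], first_line))
    return result


def report(base_url: str = CONNECT_URL, name: str = CONNECTOR_NAME) -> None:
    """Print the connector state and any task that is not RUNNING."""
    status = fetch_status(base_url, name)
    print("connector:", status["connector"]["state"])
    for task_id, state, first_line in unhealthy_tasks(status):
        print(f"task {task_id}: {state} {first_line}".rstrip())
```

Calling `report()` from a Python shell on the Docker host prints one line per failing task; the first trace line usually names the exception.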

In my case, I could see this error message:

[2020-09-27 15:26:07,275] DEBUG Failed to parse record as JSON: SinkRecord{kafkaOffset=0, timestampType=CreateTime} ConnectRecord{topic='pong', kafkaPartition=0, key=2251799813686055, keySchema=null, value={"key":2251799813686055,"type":"ping","customHeaders":{"topic":"pong"},"workflowInstanceKey":2251799813686050,"bpmnProcessId":"ping-pong","workflowDefinitionVersion":1,"workflowKey":2251799813686047,"elementId":"ServiceTask_1sx6rbe","elementInstanceKey":2251799813686054,"worker":"kafka-connector","retries":3,"deadline":1601161551014,"variables":"{}","variablesAsMap":{}}, valueSchema=null, timestamp=1601161546394, headers=ConnectHeaders(headers=)} (io.zeebe.kafka.connect.sink.message.JsonRecordParser)

com.jayway.jsonpath.PathNotFoundException: No results for path: $['variablesAsMap']['key']
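Reading the log, the record value does have a top-level `key`, but `variablesAsMap` is an empty object (and `variables` is the string `"{}"`), so a path that looks up `key` inside `variablesAsMap` has nothing to select. The sketch below reproduces that with plain dictionary lookups on an abridged copy of the record value. It is only an illustration: the `lookup` helper is hypothetical and is not the Jayway JsonPath library the connector actually uses.

```python
import json

# Record value from the log above, abridged to the fields relevant here.
record_value = json.loads(
    '{"key": 2251799813686055, "type": "ping", '
    '"customHeaders": {"topic": "pong"}, '
    '"bpmnProcessId": "ping-pong", '
    '"variables": "{}", "variablesAsMap": {}}'
)


def lookup(document: dict, *path: str):
    """Follow a chain of object keys, roughly what $['a']['b'] selects.

    Hypothetical helper: raises KeyError naming the missing segment, which
    plays the role of PathNotFoundException in this illustration.
    """
    current = document
    for segment in path:
        if not isinstance(current, dict) or segment not in current:
            raise KeyError(f"No results for path segment {segment!r}")
        current = current[segment]
    return current
```

Here `lookup(record_value, "key")` succeeds, while `lookup(record_value, "variablesAsMap", "key")` raises, mirroring the `PathNotFoundException` above.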

I tried changing the key/value converter parameters in the sink.json configuration file, but I haven't had good results yet.

Hope this helps.

Hi @giorgosnty, me again; this time I have good news.

The last two modifications I made were:

- Add these lines to the sink.json config file:

"errors.tolerance": "all",

"errors.deadletterqueue.topic.name": "deadletterqueue",

"errors.deadletterqueue.topic.replication.factor": 1,

- Restart the containers with `docker-compose down` followed by `docker-compose up`
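If you would rather script the first change than edit the file by hand, the sketch below merges the three error-handling properties from the post into sink.json. It assumes the file uses the usual Kafka Connect REST layout, `{"name": ..., "config": {...}}`, and the `add_error_handling` helper is hypothetical; check your own file's structure before running it.

```python
import json
from pathlib import Path

# The three error-handling properties from the post above.
ERROR_HANDLING = {
    "errors.tolerance": "all",
    "errors.deadletterqueue.topic.name": "deadletterqueue",
    "errors.deadletterqueue.topic.replication.factor": 1,
}


def add_error_handling(path: Path) -> dict:
    """Merge ERROR_HANDLING into the "config" object of a connector JSON file.

    Assumes the {"name": ..., "config": {...}} layout. Keys already present
    with the same names are overwritten; every other setting is kept.
    """
    connector = json.loads(path.read_text())
    connector.setdefault("config", {}).update(ERROR_HANDLING)
    path.write_text(json.dumps(connector, indent=2) + "\n")
    return connector
```

Editing the file on its own changes nothing in a running Connect worker; the connector has to be created again from the updated file (for example after the `docker-compose down` / `docker-compose up` restart) before the new settings apply.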

I don’t know which of the steps made it work, but I think it was the second one.

Now it works fine, and there are no error messages in the kafka-connect container.

Regards!