Hello, I have a use case where I would like to complete several hundred external tasks in bulk.

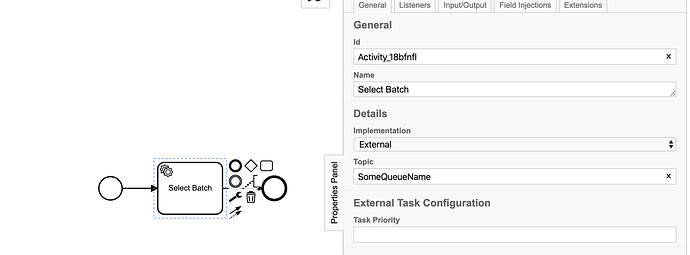

We will have multiple processes running in parallel (on the scale of thousands), and the current plan is to have these processes reach an External Task that will place them into some queue (topic). A user will poll the task queue for ready-to-go items, select a subset from the list of available items, and complete that batch all at once.

I can’t find an API that allows completing tasks in bulk, and completing 1000+ external tasks with one API call each is not viable. Is there a better BPMN construct for this? The External Task seemed appropriate because we could provide a topic in the definition and easily query for tasks on that topic. The functionality around locking and failing external tasks would also be very convenient.
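To make the problem concrete, here is a rough sketch of the flow as I understand it against the external task REST endpoints. The base URL, the worker id, and the helper names are all assumptions of mine for illustration; only the endpoint paths and the `workerId` / `maxTasks` / `topics` / `topicName` / `lockDuration` fields come from the REST API as far as I can tell.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:8080/engine-rest"  # assumption: default REST base path
WORKER_ID = "bulk-worker"                       # hypothetical worker id
TOPIC = "SomeQueueName"                         # topic from the process model


def list_queue_url():
    """URL for listing unlocked external tasks waiting on the topic."""
    query = urllib.parse.urlencode({"topicName": TOPIC, "notLocked": "true"})
    return f"{BASE_URL}/external-task?{query}"


def fetch_and_lock_request(max_tasks, lock_ms=60000):
    """URL and JSON body that lock up to max_tasks tasks for this worker."""
    body = {
        "workerId": WORKER_ID,
        "maxTasks": max_tasks,
        "topics": [{"topicName": TOPIC, "lockDuration": lock_ms}],
    }
    return f"{BASE_URL}/external-task/fetchAndLock", body


def complete_requests(task_ids):
    """One complete request per task id: this is the part that does not scale."""
    return [
        (f"{BASE_URL}/external-task/{task_id}/complete", {"workerId": WORKER_ID})
        for task_id in task_ids
    ]


def post_json(url, body):
    """POST a JSON body; returns parsed JSON, or None for an empty response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        raw = response.read()
    return json.loads(raw) if raw else None
```

Completing a selection of 1000 tasks means sending every entry of `complete_requests(selected_ids)` through `post_json`, so 1000 separate HTTP calls and 1000 separate transactions.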

This feels natural but doesn’t allow for completing the tasks in bulk, in the same transaction.

Tasks are placed into SomeQueueName, and the functionality of External Tasks is almost exactly what we need.

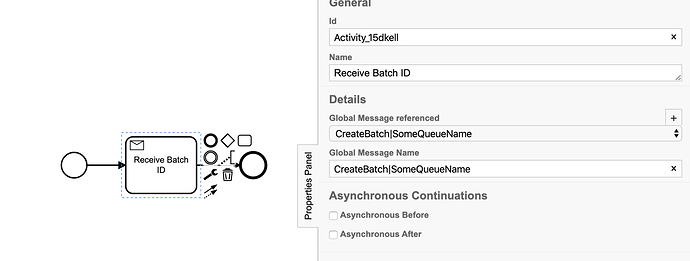

The User Task and Manual Task implementations seem to suffer from the same issue: they cannot be completed in bulk. The only constructs I can find for advancing multiple process instances in bulk are signals (not appropriate for this use case) and message receiving events.

If using a message implementation, how can we query for items in the queue? There seems to be a process engine API for querying message subscriptions, which could possibly work, but I can’t find a REST API for this sort of query. Ideally we would be able to query the queue without abusing other fields on the task like the definition key, name, or description.

Here we have a couple of hacks:

- setting the message name to something specific to both the message type and the name of the fake topic

- correlating a message to multiple processes is possible, but it seems to be a place where Camunda breaks with the intended usage of the BPMN element where a message should correlate to exactly one process instance
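Roughly, the two hacks above would look like the sketch below. The naming scheme and the helper names are made up for illustration; `messageName`, `all`, and `processInstanceId` are the fields I believe the message correlation REST endpoint (`POST /message`) accepts.

```python
def queue_message_name(message_type, topic):
    """Hack 1: encode the fake topic in the message name (hypothetical scheme)."""
    return f"{message_type}:{topic}"


def correlate_all_payload(message_type, topic):
    """Hack 2: one request that correlates to every waiting instance."""
    return {"messageName": queue_message_name(message_type, topic), "all": True}


def correlate_one_payload(message_type, topic, process_instance_id):
    """Targeted alternative: one request per selected process instance."""
    return {
        "messageName": queue_message_name(message_type, topic),
        "processInstanceId": process_instance_id,
    }
```

Neither form really fits: `correlate_all_payload` advances every instance waiting on that message rather than the subset the user selected, and `correlate_one_payload` puts us back at one call per instance.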

We could also set the name of the fake topic in the name or taskDefinitionId fields, but these also feel like hacks. The name is a display field, so it’s not ideal to put process configuration properties in it. Putting the topic name inside the taskDefinitionId feels like a similar hack. I can’t think of a clean out-of-the-box mechanism that would let us query the queue without abusing these fields.

It would be a huge detriment to our work if we were forced to manage this sort of queue in an external system to basically replicate the functionality of the external task queue plus bulk completion.

Am I missing something obvious here?