Hi!

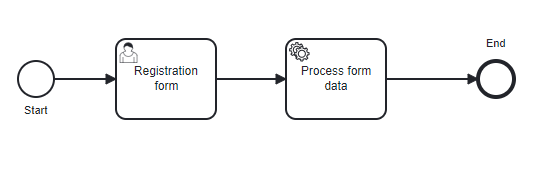

We are trying build a frontend flow with Camunda using user tasks. For simplicity I will use this very basic process as an example:

A user starts a new instance of this process from our frontend application. We then pass the process instance key to the /flownode-instances/search endpoint of the Operate API to retrieve the next task in the process, so we know which form to render.
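A minimal sketch of the request body for that lookup is shown here. The `/v1` path prefix, the filter field names (`processInstanceKey`, `type`, `state`), their enum values, and the helper name `build_active_user_task_query` are assumptions for illustration and should be checked against the Operate version in use.

```python
import json


def build_active_user_task_query(process_instance_key: int, size: int = 10) -> dict:
    """Hypothetical helper: body for POST <operate-url>/v1/flownode-instances/search.

    The path prefix and filter fields are assumptions, not confirmed in this thread.
    """
    return {
        "filter": {
            "processInstanceKey": process_instance_key,
            "type": "USER_TASK",  # assumed enum value for user tasks
            "state": "ACTIVE",    # assumed enum value for tasks not yet completed
        },
        "size": size,
    }


# Example: the body that would be sent for a made-up instance key.
print(json.dumps(build_active_user_task_query(2251799813685249), indent=2))
```

Filtering on the active state keeps the response limited to the task the user should see next, rather than every flow node the instance has already passed.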

The problem is that it takes 6 to 10 seconds before the Operate API returns any tasks. Our users would therefore have to wait that long after starting an instance before we can render the form, which is not a great user experience.

The Tasklist GraphQL API seems to have a similar delay when querying for tasks.

I have also noticed the same thing with service tasks. While our worker receives the job almost instantly, it still takes many seconds before anything is returned from the Operate API.

Is this expected behavior? Is there a faster way to retrieve current tasks for an instance?

Thanks!

Hey @Brynvald

Sorry to hear that you are facing these issues. We are aware that we currently have some performance issues with showing data in time in Operate and the other web apps.

The issue here is that the web apps are decoupled from Zeebe: Zeebe needs to export the data to Elasticsearch, then Operate picks the data up, indexes it (doing some aggregation), and stores it back to Elasticsearch. Only afterward can it show the data. We have found some potential improvements here and are working on them, as far as I know. But maybe @aleksander-dytko can shed some more light on this.

Greets

Chris

Hi @Brynvald,

We’re aware of this issue and are currently working to provide a steady import time into Operate’s Elasticsearch, even under very high load.

As @Zelldon described, considering the whole path the data must travel before arriving in the Operate and Tasklist UIs, I would say several seconds is more or less expected.

To give some additional info: when the system is idle, our import has back-offs (2 seconds) that add to all the other delays. In addition, Elasticsearch has a refresh interval of 1 second (the interval after which data becomes visible for reads), and it applies at least twice in our case: first for the Zeebe export indices and second for the Operate indices.
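As a rough worked example, the fixed waits mentioned above can be added up as follows. Counting the back-off once and the refresh interval exactly twice is an assumption; the function and constant names are only illustrative.

```python
# Values taken from the explanation above; counting each wait once is an assumption.
IMPORT_BACKOFF_S = 2.0       # importer back-off when the system is idle
ES_REFRESH_INTERVAL_S = 1.0  # Elasticsearch refresh interval
REFRESH_HOPS = 2             # Zeebe export indices, then Operate indices


def worst_case_fixed_wait_s(
    backoff_s: float = IMPORT_BACKOFF_S,
    refresh_s: float = ES_REFRESH_INTERVAL_S,
    hops: int = REFRESH_HOPS,
) -> float:
    """Upper bound contributed by the back-off plus one refresh wait per hop."""
    return backoff_s + refresh_s * hops


print(worst_case_fixed_wait_s())  # 4.0
```

So these waits alone can account for up to about 4 seconds. The exporting, importing, and indexing steps add to that.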

Cheers,

Alex

Thanks for the explanation, makes sense. We will try to find a way to work around this.

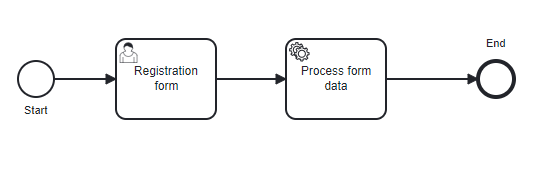

@Brynvald in our Camunda 8 library for Apex Designer, we have an option on the method that starts a process instance that waits for a task to be available to the user. After calling the gRPC API to start the instance, we poll Elasticsearch until the task arrives and then redirect the user to the task. You can see a diagram of the flow here. It usually takes a couple of seconds for the user to be redirected. There is a delay setting that you can configure on the Elasticsearch exporter that may reduce that part of the latency.
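The wait-for-task step can be sketched as a generic polling helper like the one below. This is not the Apex Designer library's code; `poll_until`, its parameters, and the default timings are hypothetical, and `fetch` stands in for whatever query (Elasticsearch or the Operate API) returns the task once it is visible.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def poll_until(
    fetch: Callable[[], Optional[T]],
    timeout_s: float = 15.0,
    interval_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> Optional[T]:
    """Call fetch() until it returns a non-None value or timeout_s elapses.

    Returns the fetched value, or None if the deadline passes first.
    """
    deadline = clock() + timeout_s
    while True:
        result = fetch()
        if result is not None:
            return result
        # Give up if the next attempt would start after the deadline.
        if clock() + interval_s > deadline:
            return None
        sleep(interval_s)
```

A caller would pass a function that runs the task query and returns the first hit or `None`, then redirect the user when a task comes back or show a fallback message on timeout.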

At some point, we would like to explore replacing the Elasticsearch exporter with an evolution of the EventStoreDB exporter. We would like to see if the new gRPC APIs of EventStoreDB would allow us to eliminate the batching in the exporter and reduce the latency between Zeebe and EventStoreDB. We could then also subscribe to EventStoreDB using long polling, which would eliminate most of the latency generated by polling Elasticsearch.

I hope those ideas help you in your analysis.

Very interesting. Thanks for the input guys, much appreciated

Any update or solutions for this issue in 2023?