We have a single project that sometimes gets some process definitions deployed twice, with the same version number. It has already happened with different process definitions, and even when we deploy many changed BPMN files in the same commit, only one of them ends up duplicated, in a new Camunda deployment.

Currently we are running Camunda Spring Boot in Kubernetes with 2 containers, using MySQL 5.7 as the database.

We have these properties set in the processes.xml:

<property name="isDeleteUponUndeploy">false</property>

<property name="isScanForProcessDefinitions">true</property>

<property name="isDeployChangedOnly">true</property>

Springboot 2.0.5.RELEASE

Camunda springboot starter 3.0.0

Camunda 7.9.0

Mysql connector 8.0.11

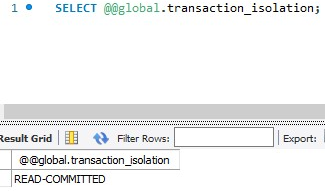

I already checked my global transaction isolation level, and it is set to READ-COMMITTED:

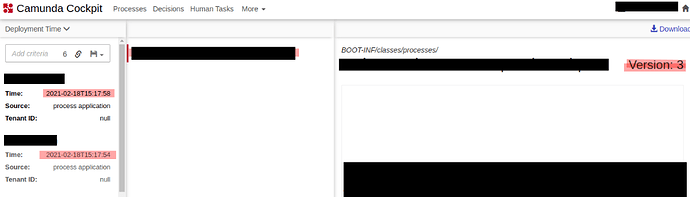

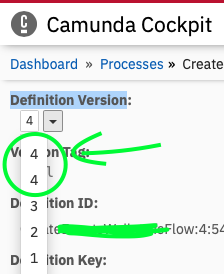

When this happens, we can see two deployments in Cockpit with only a few seconds (sometimes milliseconds) between them, and the later one contains only the duplicated resource:

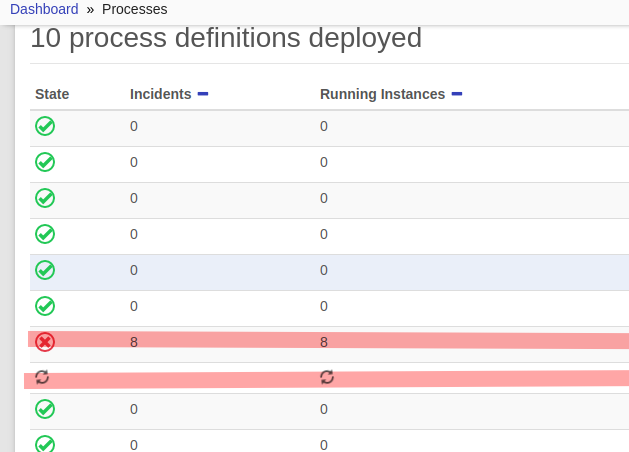

In the process definition list in Cockpit, one of them appears fine, but the other one shows a refreshing icon forever. Both have the same name:

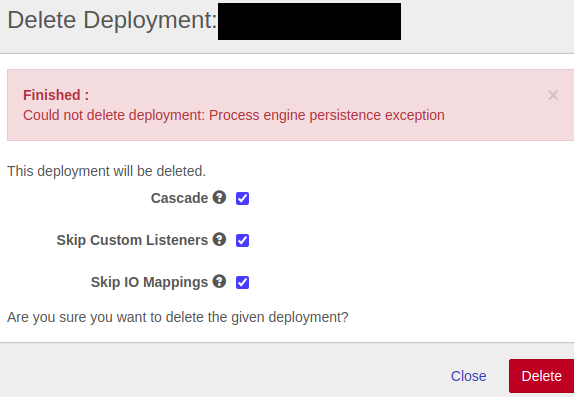

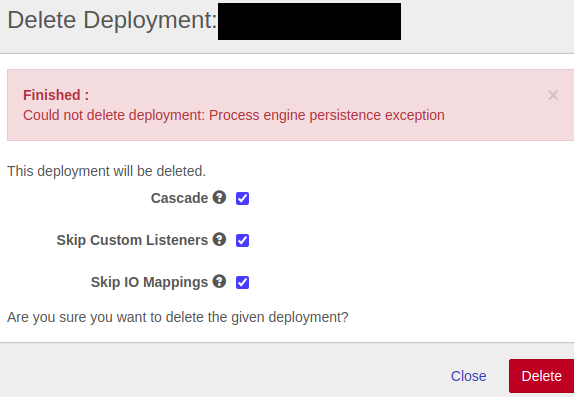

If we try to delete the deployment, it fails:

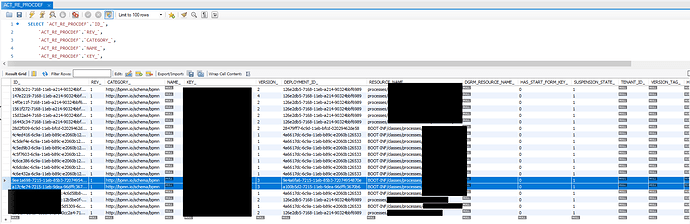

And I can see the duplicated row in the database, with a different ID_ but the same KEY_, VERSION_ and RESOURCE_NAME_:

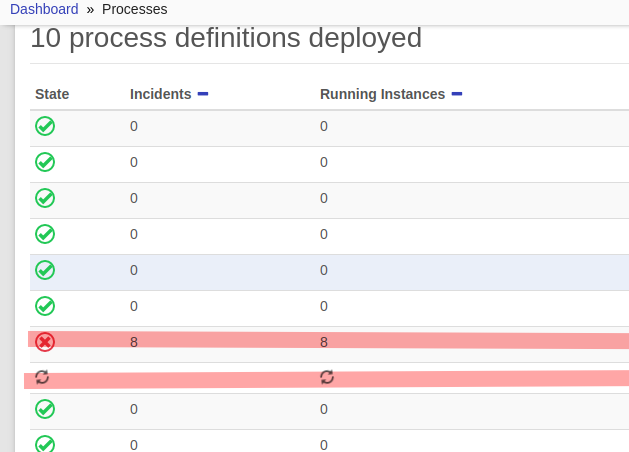

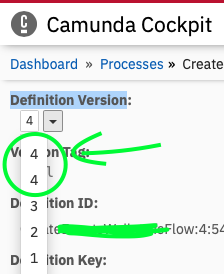

Hey there @Jean_Robert_Alves, were you able to fix this? We are facing the same problem here and are struggling to find the cause and how to fix it. This is how our Cockpit looks:

Because of this, we are not able to run a new deployment or delete the deployment via the API or Cockpit, and Cockpit itself has stopped working as it should.

@marcelobessa at the time we had this problem, I had to access the database and delete one of the two duplicated rows in the ACT_RE_PROCDEF table. Just run a select sorted by KEY_ and VERSION_, look for two rows with the same key and version 4 (as in your screenshot), and delete one of them. In my scenario, more than one process definition was duplicated, so I had to repeat these steps and delete one row for each of them until there was only a single row for every key and version combination.

I guess this problem can happen when there is something wrong with the transaction isolation level and you have more than one Camunda instance starting at the same time (k8s pods?!)
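The lookup described above can be sketched as a pair of queries. This is only an illustration: the column names are the ones mentioned in this thread plus DEPLOYMENT_ID_ and TENANT_ID_ from the standard ACT_RE_PROCDEF table, and `'myProcessKey'` is a hypothetical placeholder for your own process key.

```sql
-- List every key/version/tenant combination that has more than one row.
SELECT KEY_, VERSION_, TENANT_ID_, COUNT(*) AS copies
FROM ACT_RE_PROCDEF
GROUP BY KEY_, VERSION_, TENANT_ID_
HAVING COUNT(*) > 1;

-- Inspect the individual rows of one duplicated combination
-- ('myProcessKey' and version 4 are placeholder values).
SELECT ID_, KEY_, VERSION_, DEPLOYMENT_ID_, RESOURCE_NAME_
FROM ACT_RE_PROCDEF
WHERE KEY_ = 'myProcessKey'
  AND VERSION_ = 4
ORDER BY ID_;
```

The second query shows the ID_ and DEPLOYMENT_ID_ of each copy, so you can decide which row to remove. Taking a database backup before deleting anything is probably wise.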

Same thing here. We also added the following properties to try to prevent this from happening again:

camunda.bpm.generic-properties.properties.deployChangedOnly=true

camunda.bpm.auto-deployment-enabled=true (only for one instance of our deployment; the others are set to false)

Does anyone have a better long term solution to this problem? I still see this issue happening occasionally.

@Dalton_Delpiano for us, we stopped this error from happening by creating a unique key in the database, so the database raises an error if two pods try to deploy the same version:

ALTER TABLE IF EXISTS public.act_re_procdef

ADD CONSTRAINT unique_key_version_tenant UNIQUE (key_, version_, tenant_id_);
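The statement above uses PostgreSQL syntax (`public` schema, `ALTER TABLE IF EXISTS`). For the MySQL setup from the original post, a rough equivalent might look like the sketch below. It reuses the constraint name from the statement above and assumes no duplicate rows remain in the table, otherwise the statement fails.

```sql
-- MySQL has no ALTER TABLE IF EXISTS, so the table is named directly.
ALTER TABLE ACT_RE_PROCDEF
  ADD CONSTRAINT unique_key_version_tenant
  UNIQUE (KEY_, VERSION_, TENANT_ID_);
```

One caveat: both MySQL and PostgreSQL treat NULL values as distinct in a unique constraint, so if TENANT_ID_ is NULL (as it typically is when multi-tenancy is not used), two rows with the same KEY_ and VERSION_ would still be accepted. In that case a constraint on (KEY_, VERSION_) alone may be what you want. Either way, this is an unofficial change to the engine schema, so test it against engine upgrades first.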

Thanks for the reply.

@Jean_Robert_Alves do you know why this solution isn’t built into the Camunda product itself?