Hi Team,

As we are exploring the Camunda Helm chart for Self-Managed, we need to understand how `helm upgrade` really works. While upgrading, we see that each of the Camunda applications (Tasklist, Operate, Optimize) creates a new, separate pod and upgrades that. Once that is complete, the pods running the old version are replaced by the upgraded ones.

How will the Zeebe upgrade happen here? Setup details are below.

We have configured the Zeebe cluster with these properties:

replicas: 3

ZEEBE_BROKER_CLUSTER_PARTITIONSCOUNT: "3"

ZEEBE_BROKER_CLUSTER_CLUSTERSIZE: "3"

ZEEBE_BROKER_CLUSTER_REPLICATIONFACTOR: "3"

And here we have 3 Zeebe pods:

camunda-zeebe-0

camunda-zeebe-1

camunda-zeebe-2

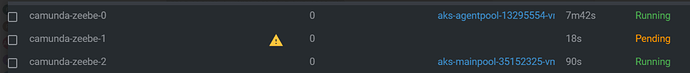

During the Zeebe upgrade, the pods are upgraded one by one. While a pod is being upgraded, it goes into the Pending state (as shown in the screenshot).

A couple of questions about this:

- No new pods are created here for Zeebe. So while a broker is being upgraded, what happens to the partitions for which this broker is the leader?

- A restart occurs as part of the upgrade. Will the Raft algorithm run here and pick a new leader from the other 2 followers?

- During the upgrade (while 1 broker is in the Pending state), only 2 brokers will be active. If either of them goes down, won't that block workflow execution completely?

- How are the partitions distributed across the brokers?

Are there any best practices we should follow when planning a Helm upgrade in production?

Thanks,

Saju

Hi Team,

Any support/guidance is highly appreciated.

Thanks,

Saju

Hi Team,

Please provide some insights on this issue. Is this a limitation on the Zeebe side that could cause downtime?

Thanks & Regards,

Saju

Hi @Saju_John_Sebastian1 - great, and complex, questions! I’m not an expert in this area, so I asked our engineers and these are the answers I got back. Let me know what additional questions you might still have!

> No new pods are created here for Zeebe. So while a broker is being upgraded, what happens to the partitions for which this broker is the leader?

New pods are created if the Zeebe image has changed. Sometimes anti-affinity rules can cause the pods to get stuck in a "Pending" state. While a broker is being upgraded, the effect is similar to that broker going offline.

> A restart occurs as part of the upgrade. Will the Raft algorithm run here and pick a new leader from the other 2 followers?

I assume the answer to this is yes, because the upgrade process is similar to a broker going offline.

> During the upgrade (while 1 broker is in the Pending state), only 2 brokers will be active. If either of them goes down, won't that block workflow execution completely?

It will likely affect workflow execution, yes, if you have an outage during an upgrade. As with any software, upgrades should be planned for and announced with possible downtime.
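To make the arithmetic behind this concrete, here is a minimal Python sketch. It assumes Raft's usual majority rule, where a partition can only make progress while more than half of its replicas are reachable. The function names are made up for illustration and are not part of any Zeebe API.

```python
def quorum(replication_factor: int) -> int:
    """Smallest majority of a partition's replicas (standard Raft rule)."""
    return replication_factor // 2 + 1


def can_process(replication_factor: int, brokers_down: int) -> bool:
    """True while enough replicas remain to elect a leader and commit.

    Assumes every broker holds a replica of every partition, which is the
    case when the replication factor equals the cluster size (3 and 3 in
    the setup described in this thread).
    """
    available = replication_factor - brokers_down
    return available >= quorum(replication_factor)


if __name__ == "__main__":
    for down in range(4):
        print(f"{down} broker(s) down -> processing possible: {can_process(3, down)}")
```

Under these assumptions, one broker restarting for the upgrade still leaves a quorum of 2 out of 3, but losing a second broker at the same time would not.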

> How are the partitions distributed across the brokers?

You can learn more about clustering here, and you can check the status of the brokers and partitions with zbctl (`zbctl status`), or with the gRPC Topology call.
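As a rough illustration of what to expect in that output, the sketch below assumes a simple round-robin placement: partition IDs start at 1, and each partition's replicas are placed on consecutive brokers. This is an assumption about the default scheme rather than a confirmed description of Zeebe's implementation, and `distribute` is a hypothetical helper. Which replica is actually the leader is decided by Raft election at runtime, so `zbctl status` remains the source of truth.

```python
def distribute(cluster_size: int, partitions_count: int, replication_factor: int) -> dict[int, list[int]]:
    """Map each partition ID to the broker IDs assumed to hold its replicas.

    Assumes round-robin placement: partition p starts at broker
    (p - 1) % cluster_size and continues on the following brokers.
    """
    layout = {}
    for partition_id in range(1, partitions_count + 1):
        start = (partition_id - 1) % cluster_size
        layout[partition_id] = [
            (start + offset) % cluster_size for offset in range(replication_factor)
        ]
    return layout


if __name__ == "__main__":
    # Values from this thread: 3 brokers, 3 partitions, replication factor 3.
    for partition_id, brokers in distribute(3, 3, 3).items():
        print(f"partition {partition_id}: brokers {brokers}")
```

With a cluster size of 3 and a replication factor of 3, every broker ends up holding a replica of every partition, so any single broker restart affects all three partitions but leaves each with two of its three replicas.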