How to enable history cleanup in a Camunda Spring Boot application when the machine is shut down?

Hello @AmberYoung ,

after the server is started again, you can start the cleanup with this call:

My assumption here is that each process has a history time to live set.
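One way to trigger the cleanup by hand is the REST endpoint `POST /history/cleanup` that comes up later in this thread. The following is only a minimal sketch: the base URL `http://localhost:8080/engine-rest` is an assumption about your setup, the class and method names are made up for illustration, and the `immediatelyDue` query parameter should be checked against the REST API reference of your Camunda version. The request is only built here, not sent.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class HistoryCleanupCall {

    // Builds (but does not send) a POST request to the history cleanup endpoint.
    // baseUrl is an assumption, e.g. "http://localhost:8080/engine-rest".
    public static HttpRequest buildRequest(String baseUrl, boolean immediatelyDue) {
        URI uri = URI.create(baseUrl + "/history/cleanup?immediatelyDue=" + immediatelyDue);
        return HttpRequest.newBuilder(uri)
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = buildRequest("http://localhost:8080/engine-rest", true);
        System.out.println(request.method() + " " + request.uri());
        // To actually send it (requires a running engine):
        // java.net.http.HttpClient.newHttpClient()
        //         .send(request, java.net.http.HttpResponse.BodyHandlers.ofString());
    }
}
```

If the REST API is secured in your application, the request would additionally need the matching authentication header.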

If the server does not start at all, you could manually delete entries from tables with the act_hi_ prefix. They have no constraints or foreign keys towards each other, so deleting data should be straightforward. Here, you can order by the removal_time_ column to delete the oldest entries first. If this column is null for a row, the instance is either still running or has no history time to live defined. In this case, I would strongly recommend defining it for each process (on process level of the BPMN file) and deploying all of them again. All instances started from this point on will be cleanable.

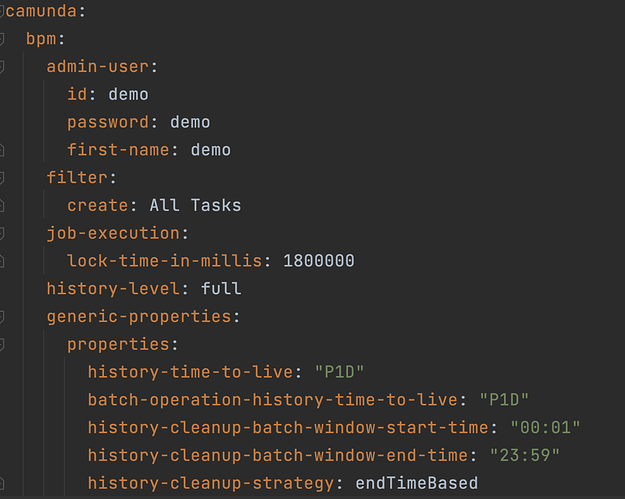
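For the manual deletion described above, a cutoff on removal_time_ has the same effect as working through the oldest entries first. The helper below is only a sketch that produces the statement text for a given history table; the class name, the method names and the table-name check are hypothetical, and the statement should be tried on a backup before it is run against a production database.

```java
import java.util.Locale;
import java.util.regex.Pattern;

public class HistoryDeleteSql {

    // Only tables with the ACT_HI_ prefix are accepted; runtime tables are rejected.
    private static final Pattern HISTORY_TABLE = Pattern.compile("ACT_HI_[A-Z_]+");

    public static boolean isHistoryTable(String table) {
        return HISTORY_TABLE.matcher(table.toUpperCase(Locale.ROOT)).matches();
    }

    // Returns a parameterized DELETE; the single "?" is the cutoff timestamp.
    // Rows with REMOVAL_TIME_ = NULL (running instances, or no TTL) are left alone.
    public static String deleteExpired(String table) {
        if (!isHistoryTable(table)) {
            throw new IllegalArgumentException("Not a history table: " + table);
        }
        return "DELETE FROM " + table.toUpperCase(Locale.ROOT)
                + " WHERE REMOVAL_TIME_ IS NOT NULL AND REMOVAL_TIME_ < ?";
    }

    public static void main(String[] args) {
        System.out.println(deleteExpired("act_hi_job_log"));
    }
}
```

The generated statement can be executed with any SQL client or a JDBC `PreparedStatement`, binding the cutoff timestamp to the placeholder.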

If you want to schedule the cleanup from inside the engine (for an ongoing cleanup), you can do this by using generic properties in spring boot:

Here, you can define the cleanup window:

There should only be 2 parameters required:

historyCleanupBatchWindowStartTime

historyCleanupBatchWindowEndTime
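To make the two parameters more concrete, here is a small sketch. It assumes the Camunda Spring Boot starter's `camunda.bpm.generic-properties.properties.` prefix for generic properties and times in `HH:mm` format; the example values `20:00` and `06:00`, the class name and the helper methods are made up for illustration. The check also assumes that a window whose end time lies before its start time runs overnight into the next day.

```java
import java.time.LocalTime;
import java.util.LinkedHashMap;
import java.util.Map;

public class CleanupWindow {

    // Assumed Spring Boot prefix for generic engine properties.
    static final String PREFIX = "camunda.bpm.generic-properties.properties.";

    // Returns the two property entries as they would appear in application.properties.
    public static Map<String, String> windowProperties(String start, String end) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put(PREFIX + "historyCleanupBatchWindowStartTime", start);
        props.put(PREFIX + "historyCleanupBatchWindowEndTime", end);
        return props;
    }

    // True if 'now' falls into the window; handles windows that cross midnight.
    public static boolean isInsideWindow(LocalTime now, LocalTime start, LocalTime end) {
        if (start.isBefore(end)) {
            return !now.isBefore(start) && now.isBefore(end);
        }
        // End is not after start: treat the window as running into the next day.
        return !now.isBefore(start) || now.isBefore(end);
    }

    public static void main(String[] args) {
        windowProperties("20:00", "06:00")
                .forEach((key, value) -> System.out.println(key + "=" + value));
        LocalTime start = LocalTime.parse("20:00");
        LocalTime end = LocalTime.parse("06:00");
        System.out.println(isInsideWindow(LocalTime.parse("23:30"), start, end));
    }
}
```

A night-time window like this keeps the cleanup jobs away from the hours in which the engine handles most of its runtime load.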

I hope this helps

Jonathan

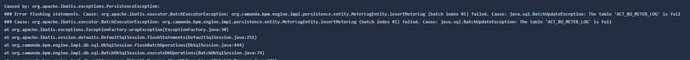

Hi @jonathan.lukas , my server can’t be started because several Camunda tables are full, including “ACT_GE_BYTEARRAY”, “ACT_RU_METER_LOG”, “ACT_HI_JOB_LOG” and so on.

As you said, I can manually delete entries from tables with the act_hi_ prefix, but how could I manually clean tables with the act_ge_ and act_ru_ prefix?

Hello @AmberYoung ,

the ACT_RU_METER_LOG can be cleaned/truncated as long as you do not have an enterprise license. If you have one, consider removing only entries older than your last measurement.

The ACT_GE_BYTEARRAY is something else and might be worth looking at. It contains all binary data, including files, stack traces, complex process variables.

As the rows are referenced from different places, it might be worth removing entries whose REMOVAL_TIME_ timestamp has already passed. Then, please have a look at the foreign keys the table has and the ones other tables have to it. Following those will help you to identify “orphaned” entries that can be removed right now. When history cleanup is configured properly, this should not happen anymore.

Other tables with ACT_GE_, ACT_RE_ or ACT_RU_ prefix should be left out as they are responsible for runtime operations.

Anyway, you will see that the history tables (and ACT_GE_BYTEARRAY) are mainly responsible for the huge amount of data.

Jonathan

Hi @jonathan.lukas, I restarted my server after cleaning some history data. Thank you for your answers. I still have some details I want to check with you.

- I want to schedule the cleanup from inside the engine, and I made these configurations. Are they enough?

1.1

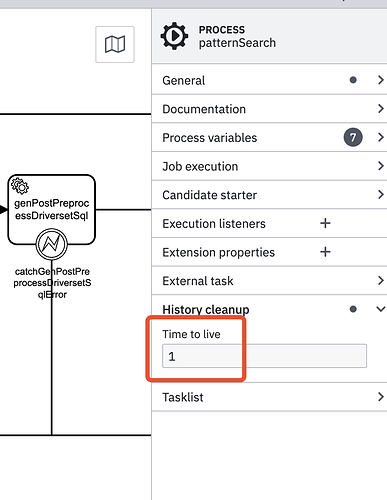

1.2 define history time to live on BPMN file

- With the configurations above, can I use the POST REST API /history/cleanup?

Hello @AmberYoung ,

- This configuration would work. However, the cleanup window is very wide. I would use a time slot in which the engine does not have much runtime load.

- This configuration is exactly what you need. Please keep in mind that only process instances started in this version of the process definition will be cleaned up.

Jonathan

Thanks @jonathan.lukas , quite helpful.

I’m wondering whether history-time-to-live in the configuration and history-time-to-live in the BPMN file have the same meaning. Is it OK if I only keep history-time-to-live in the BPMN file?

Hello @AmberYoung ,

I would recommend having both configurations in place.

As long as the process definition contains a TTL, this will be applied. But if a process definition does not have this configuration, the TTL from the engine will be applied as a “fallback”, which will prevent history entries without a removal time.
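The fallback rule can be pictured with a few lines of Java. This is only an illustration of the logic, not engine code: the class and method names are hypothetical, the `P30D` value is an example, and the removal time is assumed to be the instance's end time plus the TTL, which depends on the removal time strategy configured in your engine.

```java
import java.time.LocalDateTime;
import java.time.Period;

public class TtlFallback {

    // The TTL from the process definition wins; the engine-wide TTL is the fallback.
    public static Period effectiveTtl(Period definitionTtl, Period engineTtl) {
        return definitionTtl != null ? definitionTtl : engineTtl;
    }

    // Without any TTL there is no removal time, so the entry would never be cleaned up.
    public static LocalDateTime removalTime(LocalDateTime endTime, Period ttl) {
        return ttl == null ? null : endTime.plus(ttl);
    }

    public static void main(String[] args) {
        Period engineTtl = Period.parse("P30D"); // example engine-wide fallback
        Period definitionTtl = null;             // BPMN file without a TTL
        Period ttl = effectiveTtl(definitionTtl, engineTtl);
        System.out.println(removalTime(LocalDateTime.of(2024, 1, 1, 0, 0), ttl));
    }
}
```

With only the BPMN setting in place, a definition that misses the TTL would end up in the `null` branch, which is the situation the engine-wide value is meant to prevent.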

Jonathan