Hey guys.

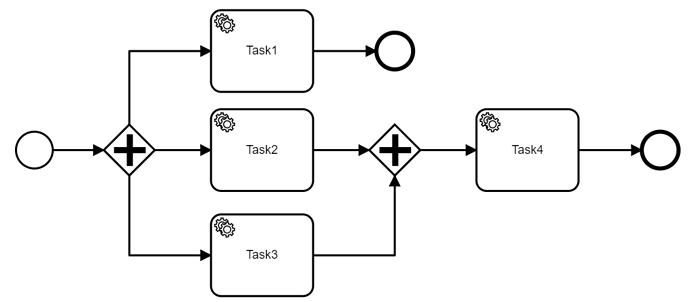

I want to implement a simple demo that sends a message via Kafka and waits for a response message on Kafka before moving on. As the broker cannot receive messages yet, I thought I would simply use a task handler that sends the message and then does nothing. The response message would then trigger the completion of the task later.

In code I do something like this:

    @ZeebeTaskListener(taskType = "payment")
    public void handleTaskA(final TasksClient client, final TaskEvent task) {
      // send message via Kafka (or rather Spring Cloud Stream, so it could also be RabbitMQ or something similar)
      messageSender.send(
          new Message<RetrievePaymentCommandPayload>(...));
      // do NOTHING, so the workflow instance keeps waiting here
    }

Now we can listen for the messages on Kafka, e.g. with Spring Cloud Stream:

    @StreamListener(target = Sink.INPUT)
    public void messageReceived(String messageJson) throws IOException {
      // deserialize the incoming JSON into a typed message
      Message<PaymentReceivedEvent> message = new ObjectMapper().readValue(
          messageJson, new TypeReference<Message<PaymentReceivedEvent>>(){});
      PaymentReceivedEvent evt = message.getPayload();
      // THIS IS NOT POSSIBLE:
      zeebeClients.tasks().complete(evt.getTaskId());
    }

I need a TaskEvent in order to complete the task. Why is this? Is there a reason that prevents completing the task with just an ID? Or perhaps with a combination of a few pieces of information?

Is there any way to reconstruct the event myself? Which fields have to be set on it, and which are not needed?

Or would it be doable to stringify the event and reconstruct it from there?
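As a rough sketch of an interim workaround that avoids reconstructing the event at all: if the task handler and the message listener run in the same process, the original event could be parked in memory under a correlation ID that travels with the Kafka message. `PendingTasks`, `park` and `claim` are hypothetical names for illustration and not part of the Zeebe client; the class is generic so that it makes no assumptions about what a `TaskEvent` contains.

```java
import java.util.Map;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical helper (not a Zeebe API): parks task events until a response arrives. */
final class PendingTasks<E> {

    // correlation ID -> event that was handed to the task handler
    private final Map<String, E> pending = new ConcurrentHashMap<>();

    /** Called from the task handler, before the outgoing message is sent. */
    void park(String correlationId, E event) {
        pending.put(correlationId, event);
    }

    /** Called from the message listener; hands out each parked event at most once. */
    Optional<E> claim(String correlationId) {
        return Optional.ofNullable(pending.remove(correlationId));
    }
}
```

The handler would call `park` with an ID that it also puts into the outgoing payload, and `messageReceived` would call `claim` with the ID from the response and pass the result to the client's completion call. The parked events are lost on restart, so this only works for a demo and does not replace completing a task by ID.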

Happy to take any thoughts - as this is currently the only way I see to implement waiting for any event (message, timer, …) from the outside.

Thanks

Bernd

Thanks for the proposal - let me comment on some key points:
