Dear all, could you please help me?

Problem description

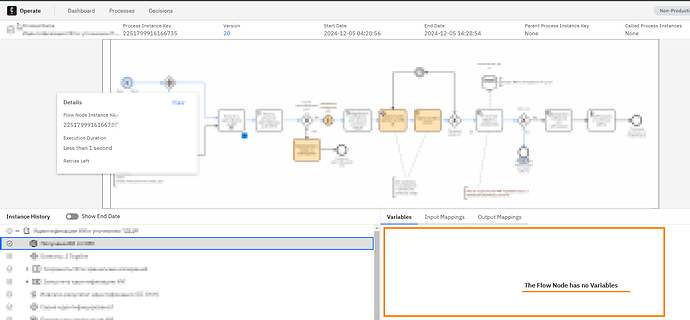

- I have an issue with Operate: after a certain time, it stops displaying process variables.

Situation

- I use Camunda 8.5.
- Zeebe exports to Elasticsearch.
- The Zeebe exporter works fine.
- The only error I see in the logs is related to Elasticsearch.

Question

Does anyone know how to fix this issue?

**Screen**

Zeebe config

```yaml
zeebe: # Docker | Camunda 8 Docs
  image: camunda/zeebe:${CAMUNDA_PLATFORM_VERSION}
  container_name: zeebe
  ports:
    - "26500:26500"
    - "9600:9600"
    - "8088:8080"
  environment: # Environment variables | Camunda 8 Docs
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_CLASSNAME=io.camunda.zeebe.exporter.ElasticsearchExporter
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_URL=http://elasticsearch:9200
    # Export "zeebe-record" metrics
    - ZEEBE_BROKER_EXECUTION_METRICS_EXPORTER_ENABLED=true
    # Delay, in seconds, before the Elasticsearch exporter force-flushes the current batch.
    # This affects how quickly data is exported from Zeebe to Elasticsearch.
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_DELAY=60
    # Batch size of the Elasticsearch exporter, in records: once the exporter has collected
    # this many records (here 100), it sends them to Elasticsearch as one bulk request.
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_SIZE=100
    # Memory limit, in bytes, of the exporter's bulk request before it is flushed
    # (52428800 bytes = 50 MiB).
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_MEMORYLIMIT=52428800
    # Minimum free disk space the broker needs to keep processing commands.
    # When free space falls below this threshold, the broker will:
    #   1) reject all client commands
    #   2) pause processing
    - ZEEBE_BROKER_DATA_DISK_FREESPACE_PROCESSING=2GB
    # Free disk space threshold for replication. According to the Camunda documentation,
    # when free space falls below this value the broker stops receiving replicated events,
    # which prevents it from running out of disk space due to incoming replicated data.
    - ZEEBE_BROKER_DATA_DISK_FREESPACE_REPLICATION=1GB
    # Automatically clean up the exporter's "zeebe-record" indices in Elasticsearch
    # (including "zeebe-record_variable") once they are older than MINIMUMAGE
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_RETENTION_ENABLED=true
    - ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_RETENTION_MINIMUMAGE=8h
    # Maximum number of commands processed within one batch
    - ZEEBE_BROKER_PROCESSING_MAXCOMMANDSINBATCH=10
    # Maximum size of incoming and outgoing messages for the Zeebe broker
    - ZEEBE_BROKER_NETWORK_MAXMESSAGESIZE=20MB
    # Maximum size of incoming and outgoing messages for the Zeebe gateway
    - ZEEBE_GATEWAY_NETWORK_MAXMESSAGESIZE=20MB
    - "JAVA_TOOL_OPTIONS=-XX:MaxRAMPercentage=50.0 -XX:InitialRAMPercentage=25.0 -XX:+ExitOnOutOfMemoryError -XX:+HeapDumpOnOutOfMemoryError"
  restart: unless-stopped
  healthcheck:
    test: [ "CMD-SHELL", "timeout 10s bash -c ':> /dev/tcp/127.0.0.1/9600' || exit 1" ]
    interval: 30s
    timeout: 5s
    retries: 5
    start_period: 30s
  volumes:
    - zeebe:/usr/local/zeebe/data
  networks:
    - camunda-platform
  depends_on:
    - elasticsearch
```
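The three bulk settings in this config decide when the exporter sends a batch to Elasticsearch: when BULK_SIZE records have accumulated, when the buffered bulk reaches BULK_MEMORYLIMIT bytes, or when BULK_DELAY seconds have passed since the last flush. The Python below is only a simplified model of that rule, using the values from this config; the class `BulkBuffer` and its methods are hypothetical and are not Zeebe's exporter code.

```python
from dataclasses import dataclass, field

# Values copied from the docker-compose environment above.
BULK_DELAY_SECONDS = 60         # ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_DELAY
BULK_SIZE = 100                 # ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_SIZE
BULK_MEMORY_LIMIT = 52_428_800  # ZEEBE_BROKER_EXPORTERS_ELASTICSEARCH_ARGS_BULK_MEMORYLIMIT


@dataclass
class BulkBuffer:
    """Hypothetical model of the exporter's batching rule (not Zeebe's code)."""
    last_flush: float = 0.0
    records: int = 0
    bytes_buffered: int = 0
    flushes: list = field(default_factory=list)  # (time, record_count) per flush

    def should_flush(self, now: float) -> bool:
        # Flush when any limit is reached; the delay only matters if something is buffered.
        return (
            self.records >= BULK_SIZE
            or self.bytes_buffered >= BULK_MEMORY_LIMIT
            or (self.records > 0 and now - self.last_flush >= BULK_DELAY_SECONDS)
        )

    def add(self, now: float, record_bytes: int) -> None:
        # Buffer one exported record, then check whether the bulk must be sent.
        self.records += 1
        self.bytes_buffered += record_bytes
        self.maybe_flush(now)

    def maybe_flush(self, now: float) -> None:
        # Also called on a timer tick, so the delay limit can fire without new records.
        if self.should_flush(now):
            self.flushes.append((now, self.records))
            self.records = 0
            self.bytes_buffered = 0
            self.last_flush = now


if __name__ == "__main__":
    buf = BulkBuffer()
    for t in range(5):                 # low traffic: 5 small records in 5 seconds
        buf.add(now=float(t), record_bytes=1024)
    buf.maybe_flush(now=30.0)          # timer tick: not due yet
    buf.maybe_flush(now=60.0)          # timer tick: delay reached
    print(buf.flushes)
```

Under this model, with BULK_DELAY=60 a low-traffic partition can hold a few records for up to about a minute before they are sent to Elasticsearch, while high traffic is flushed every 100 records.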

Error from Elasticsearch (logged by Operate's importer)

```text
2024-12-05 11:32:54.797 ERROR 7 --- [ import_2] i.c.o.u.ElasticsearchUtil : INDEX failed for type [operate-list-view-8.3.0_] and id [2251799820095593-ClaimResponse]: ElasticsearchException[Elasticsearch exception [type=document_parsing_exception, reason=[1:1799553] failed to parse: [routing] is missing for join field [joinRelation]]]; nested: ElasticsearchException[Elasticsearch exception [type=illegal_argument_exception, reason=[routing] is missing for join field [joinRelation]]];
```
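For context on the message itself: in an index with a join field (here `joinRelation`), a document whose join value names a parent is a child document, and Elasticsearch requires child documents to be indexed with a routing value (normally the parent's id) so they land on the parent's shard. Without it, indexing fails with "[routing] is missing for join field". The Python below only mimics that check over Bulk API NDJSON lines; the helper names, the index name `my-index`, the relation names `processInstance`/`variable` and the ids are hypothetical, and this is not how Operate builds its requests.

```python
import json
from typing import List, Optional


def bulk_index_lines(index: str, doc_id: str, source: dict,
                     routing: Optional[str] = None) -> List[str]:
    """Build the action line and the source line of one Bulk API 'index' action."""
    meta = {"_index": index, "_id": doc_id}
    if routing is not None:
        meta["routing"] = routing  # shard routing key in the bulk action metadata
    return [json.dumps({"index": meta}), json.dumps(source)]


def check_join_routing(action_line: str, source_line: str,
                       join_field: str = "joinRelation") -> Optional[str]:
    """Return an error message if a join-field child document has no routing.

    This only mimics the Elasticsearch rule locally; it does not talk to a cluster.
    """
    meta = json.loads(action_line)["index"]
    relation = json.loads(source_line).get(join_field)
    # A child document names its parent inside the join field value.
    is_child = isinstance(relation, dict) and "parent" in relation
    if is_child and "routing" not in meta:
        return f"[routing] is missing for join field [{join_field}]"
    return None


if __name__ == "__main__":
    # Hypothetical child document that points at a parent with id PARENT_ID.
    child = {"joinRelation": {"name": "variable", "parent": "PARENT_ID"}}
    print(check_join_routing(*bulk_index_lines("my-index", "child-1", child)))
    print(check_join_routing(*bulk_index_lines("my-index", "child-1", child,
                                               routing="PARENT_ID")))
```

Parent documents may be indexed without routing; only the child needs it, which is why the rejected document in the log is a child entry in `operate-list-view-8.3.0_`.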