Dear @camunda Team & Community,

I am working on a project that uses Zeebe in multiple services (around 10). The engine is self-hosted, and we run it in a (Rancher-based) Docker Compose setup. Each service is a Kotlin service running with Spring Boot on the JVM. We recently upgraded all of these services from Camunda 8.7 to Camunda 8.8.

Because of the deprecation of the Java and Spring libraries (even though they remain available until 8.10), we also migrated our base and testing libraries. Until then we had used zeebe-process-test with the in-memory engine. We have now switched to camunda-process-test, which uses Testcontainers.

My problem is:

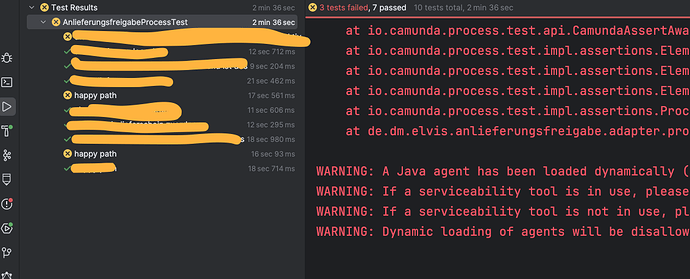

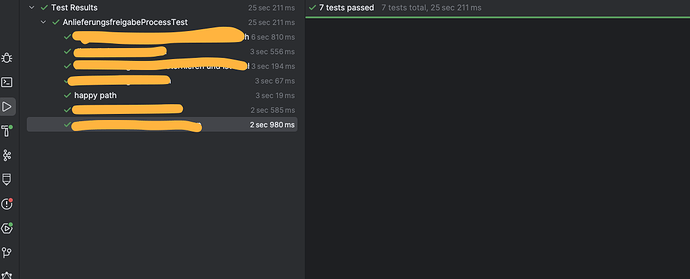

This migration has led to a significant increase in test and pipeline duration. Our whole test suite used to complete in 1-2 minutes; it now takes 5 minutes or more.

This in turn has led to very noticeable dissatisfaction (partly frustration) among the developers with regard to developer experience, and overall it reduces productivity in the project. It also impairs our ability to roll out fixes quickly when problems arise, since builds take longer as well. I don't yet know how to deal with this.

Now to my questions:

In your blog post introducing the library, you promise “faster test execution, simpler environment setup, and smoother integration into modern CI/CD workflows” by “leveraging technologies like TestContainers”. At the moment I can't see this, because:

- Test and build durations have risen significantly (well, it's a Testcontainers setup, so I would have been very impressed if it were faster)
- Complexity has increased, since our pipelines (GitLab in our case) require a Docker-in-Docker setup so that the tests can run there

So I would like to ask several things:

- What testing strategy do you propose for 8.8? Integration tests that also exercise the workers, or testing only the process definition and mocking ALL workers and related components? We currently do the former to ensure the whole process works as expected. The workers, for instance, write variables into the process, and mocking them would undermine the production code as the single source of truth.

- How can we tune the performance of our tests? Did we do something significantly wrong when setting them up (e.g., could we reduce timeouts, speed up the container startup, or reuse containers)?

- Are you planning to keep Testcontainers as the default, or are you considering extending the library with an in-memory engine again?

Does anyone in the community share these issues? If so, I would appreciate any answers or hints on how you tackle this problem.

Beyond that, more best practices on performance tuning for these tests would help me. I couldn't find much beyond this section in your docs, which explains how to set up and migrate the tests. So if I missed any such resources, I would appreciate links to them.

I have built an example on GitHub that shows how a process test in this project is basically structured: Example of a process-test in 8.8

Thank you in advance for any answers!

Marco