Hi team,

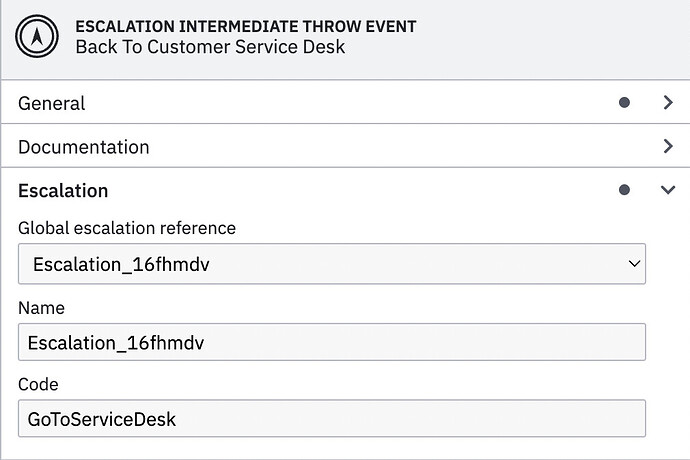

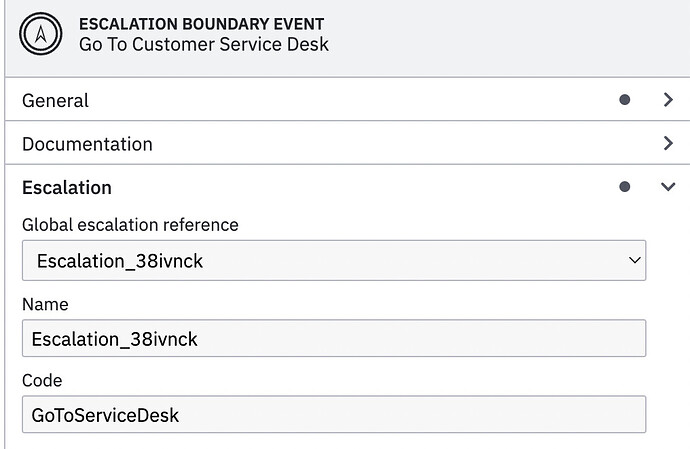

Escalation catch event

Similarly for other events like Link intermediate throw and catch

Hi,

In the current stable version of Camunda 8 (8.1), escalation events are not supported. However, they will be supported in the next release, Camunda 8.2 (to be released in April). Once they are supported, you can configure escalation events as you know them from Camunda 7. See: Release Camunda Platform 8.2.0-alpha4 · camunda/camunda-platform · GitHub


lzgabel

February 17, 2023, 10:04am


> Similarly for other events like Link intermediate throw and catch

@Naveen_KS, these are supported in 8.2.0-alpha2 and later. For more details, please see here: 8.2.0-alpha2


Hi @StephanHaarmann, @lzgabel, are there any alternatives I can use now in Camunda 8.1?

Hi @Naveen_KS ,


Thanks @StephanHaarmann, I'm doing a POC to test the functionality before migrating, so I'll test with the 8.2 alpha release for now. Thanks for your suggestions!

Hi @StephanHaarmann, are signal events also part of the 8.2 release?

Hi,

opened 02:03PM - 20 Oct 22 UTC

kind/epic

component/engine

## Introduction

In process automation, sometimes there is a need to broadcast a signal to one or multiple waiting process instances or processes.

> A BPMN Signal is similar to a signal flare that [is] shot into the sky for anyone who might be interested to notice and then react. Thus, there is a source of the Signal, but no specific intended target.

> -[BPMN 2.0.2 - 10.5.4 Intermediate Event](https://www.omg.org/spec/BPMN/2.0.2/PDF#10.5.4%20Intermediate%20Event)

> Signals [...] are typically used for broadcast communication within and across Processes, across Pools, and between Process diagrams.

> -[BPMN 2.0.2 - 10.5.1 Concepts](https://www.omg.org/spec/BPMN/2.0.2/PDF#10.5.1%20Concepts)

As a user, I can:

- deploy all Signal Events to the Engine (Zeebe).

- be sure that the Engine uses signal events correctly, e.g.

  - triggers signals when the token arrives at throwing signal events

  - and broadcasts them to all catching signal events (including Start Events)

- broadcast a signal via the gRPC API

## Types of Signal Events

<img width="692" alt="Table 10.93 - Types of Signal Events and their Markers (cropped)" title="Table 10.93 - Types of Signal Events and their Markers (cropped)" src="https://user-images.githubusercontent.com/3511026/196949501-8ce4b8da-ae64-440a-a5ba-aaaffad44e84.png">

<details>

<summary><h3>Specification of Signal Event Types</h3></summary>

<p>

The specification defines the following types of Signal Events and their behaviors:

#### Top-Level Process Start Events

<img width="687" alt="Table 10.84 – Top-Level Process Start Event Types (cropped)" title="Table 10.84 – Top-Level Process Start Event Types (cropped)" src="https://user-images.githubusercontent.com/3511026/196949757-c9397587-2968-45d9-8127-860210621a0d.png">

#### Event Sub-Process Start Events

<img width="694" alt="Table 10.86 – Event Sub-Process Start Event Types (cropped)" title="Table 10.86 – Event Sub-Process Start Event Types (cropped)" src="https://user-images.githubusercontent.com/3511026/196951294-62481cd2-2560-4c11-92c1-3fe44d70a043.png">

#### Intermediate Events

<img width="694" alt="Table 10.89 – Intermediate Event Types in Normal Flow (cropped)" title="Table 10.89 – Intermediate Event Types in Normal Flow (cropped)" src="https://user-images.githubusercontent.com/3511026/196950488-c7e45f60-03a2-4893-82ab-dd25afcf9d0f.png">

#### Boundary Events

<img width="691" alt="Table 10.90 – Intermediate Event Types Attached to an Activity Boundary (cropped)" title="Table 10.90 – Intermediate Event Types Attached to an Activity Boundary (cropped)" src="https://user-images.githubusercontent.com/3511026/196951138-171d1b3f-66aa-4a45-96fc-06569430fed6.png">

#### End Events

<img width="696" alt="Table 10.88 – End Event Types (cropped)" title="Table 10.88 – End Event Types (cropped)" src="https://user-images.githubusercontent.com/3511026/196951075-a599054f-5860-4720-8300-813380e5e970.png">

</p>

</details>

## Concept

Signals are broadcasted to both processes (received by top-level signal start events) and process instances (received by all other signal catch events).

### Top-level start events

A signal is broadcasted to all processes, so it can be caught by a signal top-level start event, starting a new instance of that process when received.

In Zeebe, this should be straightforward to implement. The process instances can be created on the same partition that the signal is broadcasted from (i.e. the partition where the signal throw event is activated; or the partition that has received the signal broadcast request from the gateway). In other words, the signal top-level start events can catch the signal on the same partition. We have no need for inter-partition communication for this part of the signal logic.

> **Note**

> We only have to create an instance of the latest version of each of the deployed processes known by that partition at the moment that the signal is received.
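To illustrate the rule in the note above, here is a minimal, self-contained sketch. It is not Zeebe code: `ProcessDefinition`, `Partition`, `start_signals`, and `on_signal` are hypothetical names invented for this example. It only models the idea that a partition, on receiving a signal, starts one instance per process whose latest known version has a top-level start event for that signal.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProcessDefinition:
    # Hypothetical model of a deployed process version.
    process_id: str
    version: int
    start_signals: frozenset  # signal names caught by top-level signal start events


@dataclass
class Partition:
    # Hypothetical model of one partition's local state.
    partition_id: int
    deployed: list = field(default_factory=list)
    instances: list = field(default_factory=list)

    def latest_versions(self):
        """Return the highest known version of each deployed process."""
        latest = {}
        for definition in self.deployed:
            current = latest.get(definition.process_id)
            if current is None or definition.version > current.version:
                latest[definition.process_id] = definition
        return list(latest.values())

    def on_signal(self, signal_name):
        """Start one instance per latest process version that catches the signal."""
        started = [
            (d.process_id, d.version)
            for d in self.latest_versions()
            if signal_name in d.start_signals
        ]
        self.instances.extend(started)
        return started


partition = Partition(
    partition_id=1,
    deployed=[
        ProcessDefinition("order", 1, frozenset({"alarm"})),
        ProcessDefinition("order", 2, frozenset({"alarm"})),
        ProcessDefinition("billing", 1, frozenset({"other"})),
    ],
)
started = partition.on_signal("alarm")
print(started)
```

In this sketch, only version 2 of `order` is started. Version 1 is ignored because it is not the latest, and `billing` does not catch the `alarm` signal. No other partition is involved.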

### Other catch events

A signal is also broadcasted to all process instances, so it can be caught by any of the other signal catch events when received. Those process instances that are awaiting the signal can continue their execution once they receive the signal.

In Zeebe, a process instance exists only on one partition. So, a signal must be broadcasted to all the partitions that have process instances. We can simply broadcast it to all partitions. Each partition receives the signal and then continues all the process instances that are awaiting that signal. So we need inter-partition communication for this part of the signal logic.
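The broadcast to waiting process instances can be sketched as follows. This is an illustrative model under stated assumptions, not the engine's implementation: the `Partition` class, `subscribe`, `on_signal`, and `broadcast` are hypothetical names, and real inter-partition communication is replaced by a plain loop.

```python
from collections import defaultdict


class Partition:
    # Hypothetical model: each partition tracks its own waiting instances.
    def __init__(self, partition_id):
        self.partition_id = partition_id
        self.waiting = defaultdict(list)  # signal name -> waiting instance keys
        self.continued = []

    def subscribe(self, signal_name, instance_key):
        """Record that a local process instance awaits the given signal."""
        self.waiting[signal_name].append(instance_key)

    def on_signal(self, signal_name):
        """Continue every local instance that awaits this signal."""
        resumed = self.waiting.pop(signal_name, [])
        self.continued.extend(resumed)
        return resumed


def broadcast(partitions, signal_name):
    """Send the signal to all partitions, whether or not they have waiters."""
    return {p.partition_id: p.on_signal(signal_name) for p in partitions}


p1, p2, p3 = Partition(1), Partition(2), Partition(3)
p1.subscribe("alarm", 101)
p2.subscribe("alarm", 201)
p2.subscribe("alarm", 202)
p2.subscribe("other", 203)

result = broadcast([p1, p2, p3], "alarm")
print(result)
```

Every partition receives the signal, including partition 3, which has nothing waiting. Instance 203 stays suspended because it awaits a different signal.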

### Signal logic overview

Here's a diagram describing both parts of the signal logic and the inter-partition communication.

<details><summary><h3>Alternative concept discussion</h3></summary>

<p>

The concept described above leads to two main performance challenges. Let's discuss these individually.

1. Each partition may have many process instances awaiting a signal. A broadcasted signal could produce lots of work for the engine on each partition. The amount of work is determined by the number of process instances awaiting that specific signal.

2. Each broadcasted signal requires communication between the partitions. This communication traffic increases linearly with the number of partitions.

There's not much we can do to reduce the impact of the first challenge because it depends directly on the number of process instances awaiting that specific signal. It's up to the user to decide how they design their processes and how many process instances are awaiting a signal. We should inform users about the performance impact that comes with the usage of signals. Over-usage must be avoided.

> **Note**

> Due to a technical limitation, there's an actual limit to the number of catch events that a signal can be caught by. At the time of writing, a processed command can only produce a limited number of follow-up records. This limitation is defined by `maxMessageSize`.

The impact of the second challenge can be reduced by communicating only with those partitions that have process instances awaiting the specific signal. We don't have to broadcast the signal to partitions that have no process instances. Likewise, we don't have to broadcast the signal to partitions that have process instances if none of them use signals, and so on.

In order for a partition to know whether another partition has process instances awaiting a specific signal, some form of bookkeeping needs to take place on both partitions. This bookkeeping means inter-partition communication in the opposite direction.

When looking at it in a diagram, it quickly becomes clear that this defeats the entire purpose. Each signal now requires multiple communications. This only improves the situation when only a few catch events receive a signal. Generally, we expect that each signal is received by many catch events.

To make matters worse, a signal subscription needs to be tracked, making the logic and implementation more complex. This subscription logic simply seems to go against the broadcasting semantics of signals. As a final nail in the coffin for this idea, the hashing of the signal name to partition id could be a problem when we want [to dynamically scale the number of partitions](https://github.com/camunda/zeebe/issues/4405). We greatly prefer the simpler solution proposed at the start.
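The message-count trade-off discussed above can be illustrated with a deliberately simplified cost model. The formulas are assumptions made for this sketch and are not taken from the issue: the simple approach is assumed to cost one message to every other partition, and the bookkeeping approach is assumed to cost one message per opened subscription plus one message per partition that has subscribers.

```python
def simple_broadcast_messages(partition_count):
    """Assumed cost: the sending partition notifies every other partition."""
    return partition_count - 1


def bookkeeping_messages(subscriptions_opened, partitions_with_subscribers):
    """Assumed cost: one bookkeeping message per opened subscription,
    plus one targeted signal message per partition with subscribers."""
    return subscriptions_opened + partitions_with_subscribers


partition_count = 8

# Few catch events: bookkeeping sends fewer messages than a full broadcast.
few = bookkeeping_messages(subscriptions_opened=2, partitions_with_subscribers=2)

# Many catch events: bookkeeping sends far more messages than a full broadcast.
many = bookkeeping_messages(subscriptions_opened=50, partitions_with_subscribers=8)

simple = simple_broadcast_messages(partition_count)
print(simple, few, many)
```

Under these assumptions, bookkeeping only wins when very few catch events await the signal, which matches the reasoning above for preferring the plain broadcast.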

</p>

</details>

## Task breakdown

The task breakdown has not yet been completed. You can find a work in progress here:

- https://github.com/camunda/zeebe/issues/10851

To summarize, the topic is split up into several stages.

- #11194

- to be determined

## Open questions

- should `signalName` only be allowed to be a static value, or can it also be a FEEL expression?

- should a signal throw event raise an incident while stage 3 has not yet been implemented?

## Links

- [Product Hub Issue](https://github.com/camunda/product-hub/issues/142) (internal)

- [C7 Docs](https://docs.camunda.org/manual/latest/reference/bpmn20/events/signal-events/)

## Special thanks

- To @NPDeehan and @Nlea for their awesome help with the concept process model

- To @skayliu for preparing an overview of the technical task breakdown

A milestone is not set; thus, I assume that it may not be part of the 8.2 release.

lzgabel

February 22, 2023, 11:35am



Thanks for the update, much appreciated! @lzgabel, @StephanHaarmann