Hi all,

We’re evaluating Camunda 8 for some of our workflows. I’ve set up a self-managed test cluster in Kubernetes (k3s) with 3 nodes. K3s ships with Traefik, but I installed the Nginx Ingress Controller, since the Helm charts assume nginx as the ingressClassName. I deploy everything using the Camunda 8 Helm charts (see below for the values I use).

The cluster works as expected and all components are operational. The setup creates two ingresses: a global ingress for Tasklist, Optimize, Identity and Operate, and a separate ingress for the Zeebe gateway.

Using k3d, I have the cluster create a load balancer for ports 80, 9600 and 26500, assuming these will suffice.

# Chart values for the Camunda Platform 8 Helm chart in combined Ingress setup.

# This file deliberately contains only the values that differ from the defaults.
# For changes and documentation, use your favorite diff tool to compare it with:
# http://github.com/camunda/camunda-platform-helm/blob/5cbf47106c55a60d427c786cb9a946489bce5815/charts/camunda-platform

# IMPORTANT: Make sure to change "localhost" to your domain.

global:
  ingress:
    enabled: true
    host: "localhost"
  identity:
    auth:
      publicIssuerUrl: "http://localhost/auth/realms/camunda-platform"
      operate:
        redirectUrl: "http://localhost/operate"
      tasklist:
        redirectUrl: "http://localhost/tasklist"
      optimize:
        redirectUrl: "http://localhost/optimize"
      webModeler:
        redirectUrl: "http://localhost/modeler"

identity:
  contextPath: "/identity"
  fullURL: "http://localhost/identity"

operate:
  contextPath: "/operate"

optimize:
  contextPath: "/optimize"

tasklist:
  contextPath: "/tasklist"

webModeler:
  # The context path is used for the web application that will be accessed by users in the browser.
  # In addition, a WebSocket endpoint will be exposed on "[contextPath]-ws", e.g. "/modeler-ws".
  contextPath: "/modeler"

#zeebe:
#  clusterSize: "1"
#  partitionCount: "1"
#  replicationFactor: "1"

zeebe-gateway:
  replicas: 1
  ingress:
    enabled: true
    host: "zeebe.localhost"

elasticsearch:
  replicas: 1

#console:
#  enabled: true
#  ingress:
#    enabled: true
#    host: "console.localhost"

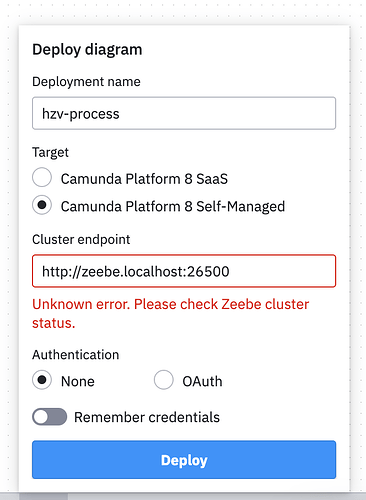

When I try to connect to the Zeebe Gateway with the Desktop Modeler, I get the following error.

I also set up a small example project using Kotlin and the Quarkus Zeebe client.

...
// Client pointed at the host of the Zeebe gateway ingress
val client = ZeebeClient.newClientBuilder().gatewayAddress("zeebe.localhost:26500").build()

// Deploys the BPMN process from the classpath when /start is called
@GET
@Path("/start")
fun start() {
    client.newDeployResourceCommand().addResourceFromClasspath("flo-backend-business-signup-flow.bpmn").send().join()
}
...

Again, I run into a connectivity issue:

Caused by: io.camunda.zeebe.client.api.command.ClientStatusException: Network closed for unknown reason
    at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.transformExecutionException(ZeebeClientFutureImpl.java:93)
    at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.join(ZeebeClientFutureImpl.java:50)
    at io.quarkiverse.zeebe.runtime.ZeebeRecorder.init(ZeebeRecorder.java:79)
    at io.quarkus.deployment.steps.ZeebeProcessor$runtimeInitConfiguration1713424349.deploy_0(Unknown Source)
    at io.quarkus.deployment.steps.ZeebeProcessor$runtimeInitConfiguration1713424349.deploy(Unknown Source)
    ... 13 more
Caused by: java.util.concurrent.ExecutionException: io.grpc.StatusRuntimeException: UNAVAILABLE: Network closed for unknown reason
    at java.base/java.util.concurrent.CompletableFuture.reportGet(CompletableFuture.java:396)
    at java.base/java.util.concurrent.CompletableFuture.get(CompletableFuture.java:2073)
    at io.camunda.zeebe.client.impl.ZeebeClientFutureImpl.join(ZeebeClientFutureImpl.java:48)
    ... 16 more
Caused by: io.grpc.StatusRuntimeException: UNAVAILABLE: Network closed for unknown reason
    at io.grpc.Status.asRuntimeException(Status.java:539)
    at io.grpc.stub.ClientCalls$StreamObserverToCallListenerAdapter.onClose(ClientCalls.java:491)
    at io.grpc.internal.DelayedClientCall$DelayedListener$3.run(DelayedClientCall.java:489)
    at io.grpc.internal.DelayedClientCall$DelayedListener.delayOrExecute(DelayedClientCall.java:453)
    at io.grpc.internal.DelayedClientCall$DelayedListener.onClose(DelayedClientCall.java:486)
    at io.grpc.internal.ClientCallImpl.closeObserver(ClientCallImpl.java:567)
    at io.grpc.internal.ClientCallImpl.access$300(ClientCallImpl.java:71)
    at io.grpc.internal.ClientCallImpl$ClientStreamListenerImpl$1StreamClosed.runInternal(ClientCallImpl.java:735)
    at io.grpc.internal.ClientCallImpl$ClientStreamListenerImpl$1StreamClosed.runInContext(ClientCallImpl.java:716)
    at io.grpc.internal.ContextRunnable.run(ContextRunnable.java:37)
    at io.grpc.internal.SerializingExecutor.run(SerializingExecutor.java:133)
    at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1136)
    at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:635)
    ... 1 more
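
Since "UNAVAILABLE: Network closed for unknown reason" does not say whether the port is unreachable or whether something on it rejects the gRPC connection, a plain TCP probe might help separate the two cases. The following is only a minimal sketch using the JDK and no Camunda libraries. The function name `isPortOpen` and the 2-second timeout are my own assumptions, and the hosts and ports are simply the ones from the setup above. An open port only shows that something accepts connections there, not that gRPC, HTTP/2 or TLS are routed correctly behind it.

```kotlin
import java.io.IOException
import java.net.InetSocketAddress
import java.net.Socket

// Hypothetical helper: returns true if a TCP connection to host:port can be
// opened within timeoutMs. Unresolvable hosts, refused connections and
// timeouts all surface as IOException and are reported as "not open".
fun isPortOpen(host: String, port: Int, timeoutMs: Int = 2000): Boolean =
    try {
        Socket().use { socket ->
            socket.connect(InetSocketAddress(host, port), timeoutMs)
            true
        }
    } catch (e: IOException) {
        false
    }

fun main() {
    // Hosts and ports taken from the setup described in this post.
    val targets = listOf(
        "localhost" to 80,
        "zeebe.localhost" to 80,
        "zeebe.localhost" to 9600,
        "zeebe.localhost" to 26500
    )
    for ((host, port) in targets) {
        val state = if (isPortOpen(host, port)) "open" else "unreachable"
        println("$host:$port -> $state")
    }
}
```

If a port shows up as unreachable here, the problem would sit in the k3d load balancer or port mapping rather than in the Zeebe client; if it is open, the protocol layer (plaintext vs. TLS, gRPC through the ingress) would be the next thing to look at.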

I see numerous topics on the forum from people having trouble connecting to the Zeebe gateway with Desktop Modeler, a Zeebe client or zbctl.

What am I missing?