We are investigating Camunda 8 again (we also did this almost two years ago when 8.0 was released), now that a RabbitMQ Producer Connector and RabbitMQ Inbound Connector have been added.

We rely heavily on RPC calls to initiate actions. Simply publishing a message isn’t enough, since we need to wait until the action has finished and handle any failures.

This is based on the RabbitMQ tutorial "Remote procedure call (RPC)".

Prerequisite:

- Make sure there is a

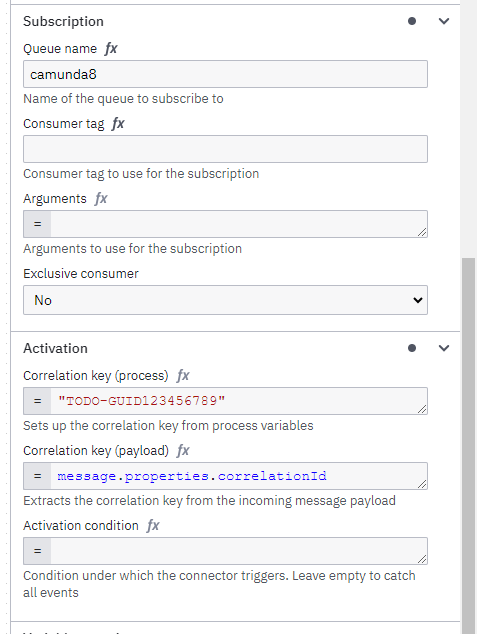

camunda8queue with a binding oncamunda.rpc.reply.

It roughly goes like this:

- Generate a GUID for the correlationId (still need to find out how we best do this)

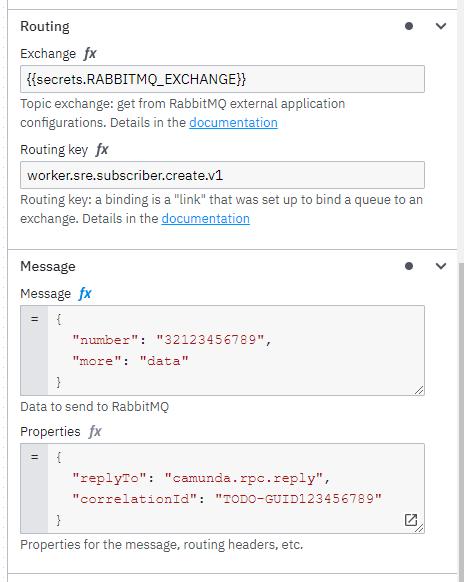

- Use the RabbitMQ Outbound Connector to send a message.

  - The routing key is something like `worker.sre.subscriber.create.v1`.

  - The body contains a JSON with information about the subscriber to create.

  - The properties contain `{"replyTo": "camunda.rpc.reply", "correlationId": guid}`.

- Another worker that is subscribed to the RabbitMQ bus receives messages with routing key `worker.sre.subscriber.create.v1` and creates the subscriber in the backend. When this is done (might take a while), it sends back a message to the routing key indicated by the `replyTo` property to indicate success or failure. The passed correlationId will be relayed in this reply.

- The Camunda process listens for these messages and takes action based on the success or failure.
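To make the message shapes concrete, here is a minimal Python sketch of the request and the worker's reply. It only builds plain dictionaries and does not talk to RabbitMQ. The function names, the example subscriber fields, and the `success`/`detail` reply body are assumptions for illustration; only the routing keys and the `replyTo`/`correlationId` properties come from the setup described above.

```python
import json
import uuid

REQUEST_ROUTING_KEY = "worker.sre.subscriber.create.v1"
REPLY_ROUTING_KEY = "camunda.rpc.reply"


def build_request(subscriber: dict) -> dict:
    """Build the outgoing RPC message: routing key, JSON body and AMQP properties."""
    return {
        "routing_key": REQUEST_ROUTING_KEY,
        "body": json.dumps(subscriber),
        "properties": {
            "replyTo": REPLY_ROUTING_KEY,
            "correlationId": str(uuid.uuid4()),  # fresh random GUID per request
        },
    }


def build_reply(request: dict, success: bool, detail: str = "") -> dict:
    """Worker side: answer on the replyTo routing key and relay the correlationId unchanged."""
    props = request["properties"]
    return {
        "routing_key": props["replyTo"],
        # Hypothetical reply body; the real worker may use a different shape.
        "body": json.dumps({"success": success, "detail": detail}),
        "properties": {"correlationId": props["correlationId"]},
    }


if __name__ == "__main__":
    request = build_request({"name": "example-subscriber"})  # hypothetical payload
    print(build_reply(request, success=True))
```

The important part is that the reply carries exactly the correlationId of the request, since that is the only thing the waiting process can match on.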

Configuration in the Modeler:

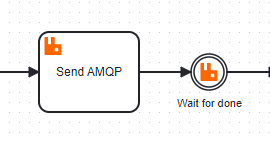

Send AMQP:

Wait for done:

This works, but it’s a bit cumbersome. Is there a better way to handle this? Unfortunately, there is also no FEEL function to generate a GUID (see Built-in function for UUID).

Ideally, there would be an RPC task definition in the RabbitMQ Connector that combines these steps:

- Generate a GUID for the correlationId

- Publish the message

- Listen for a reply on the specified queue and pick up a reply with the correct correlationId

- Communicate to Zeebe to continue the process
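The four steps above could be sketched roughly as follows. This is not an existing Connector feature, just a toy Python model of the idea: the `RpcClient` class, the `publish` hook, and the use of a `Future` as a stand-in for "tell Zeebe to continue" are all assumptions made for illustration.

```python
import uuid
from concurrent.futures import Future
from typing import Callable, Dict


class RpcClient:
    """Toy RPC helper: publish a request and resolve it when the matching reply arrives."""

    def __init__(self, publish: Callable[[str, str, dict], None], reply_to: str):
        self._publish = publish  # hypothetical transport hook: (routing_key, body, properties)
        self._reply_to = reply_to
        self._pending: Dict[str, Future] = {}

    def call(self, routing_key: str, body: str) -> Future:
        correlation_id = str(uuid.uuid4())  # 1. generate a GUID for the correlationId
        future: Future = Future()
        # Register before publishing so a very fast reply cannot be missed.
        self._pending[correlation_id] = future
        self._publish(  # 2. publish the message
            routing_key,
            body,
            {"replyTo": self._reply_to, "correlationId": correlation_id},
        )
        return future

    def on_reply(self, body: str, properties: dict) -> bool:
        # 3. only pick up replies whose correlationId we are waiting for
        future = self._pending.pop(properties.get("correlationId"), None)
        if future is None:
            return False  # not ours; the caller decides whether to requeue or ignore it
        future.set_result(body)  # 4. stand-in for telling Zeebe to continue the process
        return True


if __name__ == "__main__":
    sent = []
    client = RpcClient(lambda rk, b, p: sent.append((rk, b, p)), "camunda.rpc.reply")
    pending = client.call("worker.sre.subscriber.create.v1", "{}")
    client.on_reply('{"success": true}', {"correlationId": sent[0][2]["correlationId"]})
    print(pending.result(timeout=1))
```

Returning `False` for unknown correlationIds keeps the decision about foreign messages outside the helper, which matters when several processes share one reply queue.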

I’ve also seen this warning on the RabbitMQ Connector page of the Camunda 8 docs:

To maintain stable behavior from the RabbitMQ Connector, do not subscribe multiple RabbitMQ Connectors to the same queue.

Successfully consumed messages are removed from the queue, even if they are not correlated.

Does this mean that this setup isn’t even supported? There might be other messages arriving on our single response queue for other processes…
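To illustrate the concern, here is a toy Python model of how the quoted warning reads. It is an interpretation of the documentation text, not the Connector's actual implementation; the `drain` function and the message shape are made up for this example.

```python
from collections import deque


def drain(queue: deque, waiting: set) -> tuple:
    """Consume every message; correlate known ids and drop the rest without requeueing."""
    correlated, dropped = [], []
    while queue:
        message = queue.popleft()  # consuming removes the message from the queue
        if message["correlationId"] in waiting:
            correlated.append(message)
        else:
            dropped.append(message)  # no longer available to any other consumer
    return correlated, dropped


if __name__ == "__main__":
    # Two replies arrive on the shared reply queue, but this consumer only waits for "a".
    shared_queue = deque([{"correlationId": "a"}, {"correlationId": "b"}])
    correlated, dropped = drain(shared_queue, waiting={"a"})
    print("correlated:", correlated)
    print("dropped:", dropped)
```

In this model the reply for "b" is consumed and lost even though another process might still be waiting for it, which is the scenario the question above is about.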