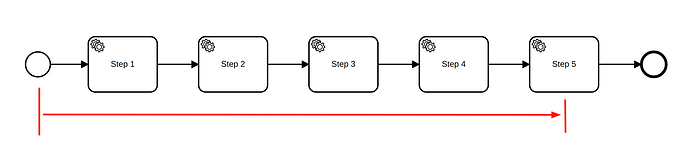

Kristof Jozsa: my goal is to tune a sample flow as shown here on the picture. It starts with a variable set to the current timestamp, has 4 no-op workers, and a 5th worker prints the time spent since the start.

Running camunda platform 8 on my local machine (8 cores, 32g ram) and the test itself too, it seems that out-of-the-box with the default configuration

• a single flow runs in ~350ms

• 5 concurrent flows (started in a loop on a single thread) run around ~1100ms/each

• 10 concurrent flows run around ~2100ms/each

• 20 concurrent flows blow up with RESOURCE_EXHAUSTED

What can I do on the server side and what on the client side, to tune to the fastest possible performance in these cases while staying at 100% stability?

Kristof Jozsa: @Josh Wulf @zell what do you guys think? What settings would have the biggest impact on such a scenario?

Kristof Jozsa: (and tell me if you need the sample project I quickly have put together for this test)

Josh Wulf: Max Jobs Active is how many jobs your worker will activate at a time. If you set it to 3, and there is only one job available when the worker polls, it will activate that job, and then poll for 2 more.

If you set it to max jobs active 1, then it asks for 1 job, and when it gets one, it will complete that job before polling for more.

You can tune the max jobs active and the polling interval to get the best performance. Also, decrease the number of workers if you have more than one.

More is not necessarily better when it comes to workers. Some latency to the job activation time can be better than constantly polling that saturates the brokers.

If max jobs active 1 doesn’t trigger RESOURCE_EXHAUSTED then it sounds like that is your number right there.

zell: > Running camunda platform 8 on my local machine

What does this mean? How does you setup look like exactly? Do you run one broker? Or multiple? Embedded Gateway or Standalone? How many partitions?

What is the goal of this test? Does this make sense to execute that against your local machine?

Kristof Jozsa: @Josh Wulf I do not understand how or why I get RESOURCE_EXHAUSTED at the server side at such a low parallel instance count like 20, and why I don’t get it if the client polls for Max Jobs Active 1

Kristof Jozsa: @zell it’s out of the box C8 at the moment ran using docker-compose-core.yaml in the camunda-platform git repo.

The goal of this test to understand the minimum overhead / maximum bottleneck what our clients can expect using the C8 platform, as well as giving us a chance to tune the parameters in a very simple, easily repeatable scenario.

Yes, it makes a lot of sense, as long as we are clear that the resources are split between the tested system and the testing system (hence potentially messing with the results) and that the whole system is quite short on resources in general (8core/32g ram is not much really these days).

Josh Wulf: > low parallel instance count like 20, and why I don’t get it if the client polls for Max Jobs Active 1

Do you mean Max Jobs Active 20 vs Max Jobs Active 1?

If so, it’s because the worker is polling for more jobs frequently. You could make sure that it is using long-polling. The problem may be that when the worker polls, if it gets back a job, but is not at its max, it will poll again immediately.

So if you can activate 1 job per second, and the job takes 3 seconds to complete, a max job active 1 worker will poll every 3 seconds. A worker of max job active 3 or more will poll every second. The constraint on the tempo is the server responding with a job to activate.

Kristof Jozsa: my experience is that starting 20 of these trivial workflows in a loop:

• Max Active Jobs == 1 works

• Max Active Jobs == 5 throws RESOURCE_EXHAUSTED

and this is completely reproducable

Kristof Jozsa: and given the low number of resources required to such a really basic test, I doubt this is actually a resource exhaustion scenario

Kristof Jozsa: https://github.com/kjozsa/c8-perftest/tree/main/src/main/java/hu/dpc/phee/perftest

Josh Wulf: It is a resource exhaustion scenario. Your workers are too eager wrt to the workload, and the broker is being saturated with their incessant requests for more work with the resources that it has.

You could try tuning the back pressure algorithm used, reducing max jobs, or increasing the broker resources - and I’d recommend timing the throughput and cycle time while you do that.

Josh Wulf: If you are interested in the science/black art of performance tuning Zeebe, check this out: https://camunda.com/blog/2020/11/zeebe-performance-tool/

Kristof Jozsa: @Josh Wulf I was just thinking about the default configuration you ship C8 with: it should probably be 100% stable, even on the price of some performance hit.

I’m not a complete newcomer here, but being able to blow up the default config with starting just 20 flows is a scary experience

Kristof Jozsa: it’s not like we’d want to scale up/out for 5k or 100k concurrent flows… it’s twenty flows ![]()

Josh Wulf: It’s not the flows. It is the workers - particularly the max jobs active setting. And the broker resources that you start it with.

So if you run it with max jobs active 1, it works better.

It may work better with max jobs active set to 5 with 2000 flows, or 20 flows / second.

This is a distributed streaming system, so its performance is a complex multi-variable equation.

Note: This post was generated by Slack Archivist from a conversation in the Camunda Platform 8 Slack, a source of valuable discussions on Camunda 8 (get an invite). Someone in the Slack thought this was worth sharing!

If this post answered a question for you, hit the Like button - we use that to assess which posts to put into docs.