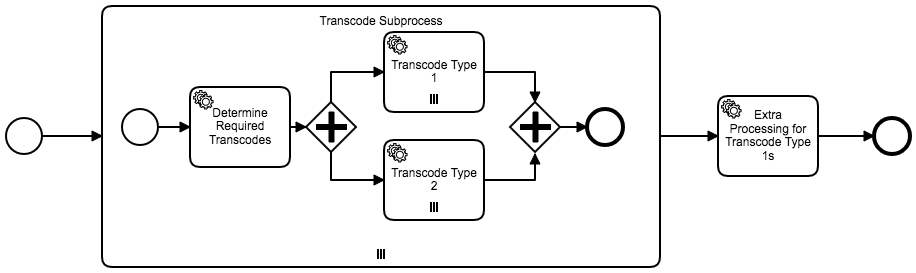

I’ve got a workflow that starts with a multi-instance subprocess. When the workflow starts, we already know there will be N instances of the subprocess (each associated with an id), so we just set a “subprocessIds” variable and use it as the multi-instance collection. All good.
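A minimal sketch of that setup, assuming a Camunda 7 / Activiti-style engine where the resulting map would be passed as the `variables` argument of `RuntimeService.startProcessInstanceByKey`. The `StartVariables` class and its method are hypothetical names used only for illustration:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper that builds the start variables for the workflow.
// The multi-instance subprocess is assumed to use "subprocessIds" as its
// collection, so the engine creates one subprocess instance per id (N in total).
final class StartVariables {
    private StartVariables() {}

    static Map<String, Object> forSubprocesses(List<String> subprocessIds) {
        Map<String, Object> variables = new HashMap<>();
        // Copy so later changes to the caller's list don't leak into the process.
        variables.put("subprocessIds", new ArrayList<>(subprocessIds));
        return variables;
    }
}
```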

Each subprocess instance generates M artifacts (each with an artifactId). Once all subprocess instances have completed, I need to kick off another multi-instance task to process the N*M artifacts they produced, but I’m unclear on the best way to populate the collection variable used by this task.
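To make the N*M shape concrete, here is a sketch of flattening the per-subprocess results into the single collection the downstream task needs. It assumes the artifact ids can be grouped by the subprocess that produced them; the `Map` stands in for the database, and `ArtifactCollection.flatten` is a hypothetical name:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical model: each subprocess id maps to the artifact ids it produced.
// The downstream multi-instance task needs one flat collection of all N*M ids.
final class ArtifactCollection {
    private ArtifactCollection() {}

    static List<String> flatten(List<String> subprocessIds,
                                Map<String, List<String>> artifactsBySubprocess) {
        List<String> artifactIds = new ArrayList<>();
        for (String subprocessId : subprocessIds) {
            // Iterate in subprocess order so the resulting collection is deterministic.
            artifactIds.addAll(artifactsBySubprocess.getOrDefault(subprocessId, List.of()));
        }
        return artifactIds;
    }
}
```

With N = 2 subprocesses producing M = 3 artifacts each, the result is a single list of 6 ids.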

I have tried setting an “end” execution listener on the subprocess that queries the database and sets an “artifactIds” variable with the results. This works, but the listener appears to run once for each subprocess instance rather than once after all of them have completed. That is acceptable here, since the last execution to finish gets the full list from the database, but it means N-1 needless queries when I only care about the last one.
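The listener approach can be sketched as below. `Execution` and `ArtifactRepository` are hypothetical stand-ins for the engine’s `DelegateExecution` and whatever data access is actually in use; the simulation fires the listener once per subprocess instance, as observed, which is where the extra queries come from:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for the engine's execution object (e.g. DelegateExecution);
// only the methods this sketch needs are modelled.
interface Execution {
    String getProcessInstanceId();
    void setVariable(String name, Object value);
}

// Hypothetical data-access interface for the artifacts table.
interface ArtifactRepository {
    List<String> findArtifactIds(String processInstanceId);
}

// The "end" listener described above: query the database and overwrite
// "artifactIds". Attached to the subprocess activity, it fires once per instance.
final class CollectArtifactsListener {
    private final ArtifactRepository repository;

    CollectArtifactsListener(ArtifactRepository repository) {
        this.repository = repository;
    }

    void notify(Execution execution) {
        String processInstanceId = execution.getProcessInstanceId();
        execution.setVariable("artifactIds", repository.findArtifactIds(processInstanceId));
    }
}

// Simulates N subprocess instances finishing one after another: each writes its
// M artifacts, then the end listener fires for that instance.
final class ListenerSimulation {
    int queryCount = 0;
    final List<String> database = new ArrayList<>();
    final Map<String, Object> variables = new HashMap<>();

    void run(int n, int m) {
        ArtifactRepository repository = processInstanceId -> {
            queryCount++;
            return new ArrayList<>(database); // snapshot of the rows written so far
        };
        Execution execution = new Execution() {
            @Override
            public String getProcessInstanceId() { return "pi-1"; }

            @Override
            public void setVariable(String name, Object value) { variables.put(name, value); }
        };
        CollectArtifactsListener listener = new CollectArtifactsListener(repository);
        for (int i = 0; i < n; i++) {
            for (int j = 0; j < m; j++) {
                database.add("artifact-" + i + "-" + j);
            }
            listener.notify(execution);
        }
    }
}
```

With N = 3 and M = 2, the repository is queried 3 times, and only the last query sees all 6 artifacts.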

It’s also simple enough to create a JavaDelegate-based task whose sole job is to query the database and set the variable for the downstream multi-instance task. Having to model this explicitly in the workflow just to set a variable seems like overkill, though. Even so, I’m currently leaning towards this solution, since it makes explicit what’s going on and runs only once.
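The service-task alternative, sketched with the same kind of hypothetical stand-ins: `Delegate` mirrors the shape of the engine’s `JavaDelegate` (an `execute` method that receives the current execution), and `ArtifactRepository` is an assumed data-access interface. Because the task sits after the multi-instance subprocess in the flow, it runs once, after every instance has completed:

```java
import java.util.List;

// Minimal stand-ins that mirror the shape of the engine's DelegateExecution and
// JavaDelegate types. Hypothetical: swap in the real engine interfaces.
interface Execution {
    String getProcessInstanceId();
    void setVariable(String name, Object value);
}

interface Delegate {
    void execute(Execution execution) throws Exception;
}

// Hypothetical data-access interface for the artifacts table.
interface ArtifactRepository {
    List<String> findArtifactIds(String processInstanceId);
}

// Service task placed after the multi-instance subprocess. It runs once, when
// every subprocess instance has completed, and publishes the full collection.
final class LoadArtifactIdsDelegate implements Delegate {
    private final ArtifactRepository repository;

    LoadArtifactIdsDelegate(ArtifactRepository repository) {
        this.repository = repository;
    }

    @Override
    public void execute(Execution execution) {
        List<String> artifactIds = repository.findArtifactIds(execution.getProcessInstanceId());
        // The downstream multi-instance task would use "artifactIds" as its collection.
        execution.setVariable("artifactIds", artifactIds);
    }
}
```

The trade-off is the one described above: one extra task in the model in exchange for a single query and a visible step in the diagram.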

Is there a better way to deal with multi-instance tasks where the “collection” needs to come from a database query?